Among the challenges facing robotics developers working on navigation and manipulation is the need for good data for machine learning. For applications ranging from warehouses to factories and fields, roboticists rely on simulation to accelerate testing and address potential edge cases. NVIDIA Corp. today announced the open beta of its Isaac Sim engine, which includes several new features.

Isaac Sim, which is built on NVIDIA's Omniverse platform, now includes support for multiple cameras and sensors, compatibility with ROS 2, the ability to import CAD [computer-aided design] assets, and synthetic data generation and domain randomization. These features will help designers train a wide range of robots by deploying “digital twins,” in which the robots are tested in an accurate virtual environment, said the Santa Clara, Calif.-based company.

“Currently, the simulation-to-reality gap means that most developers prefer to test on hardware with only limited functionality,” said Gerard Andrews, senior product marketing manager at NVIDIA. “They had only primitive tools to import robots or build complex scenes.”

“Synthetic data generation and domain randomization are important for generating datasets to train perception models for AI-based robots,” he told Robotics 24/7. “With more diverse datasets built with 'ground truth' data, we can provide more robust perception models for robots and autonomous vehicles.”

Isaac Sim built on Omniverse

Omniverse is the foundation for NVIDIA's simulators. With more realistic simulation, robotics developers can more efficiently train their robots to interact with dynamic environments, according to the company.

“Omniverse is built on Pixar's USD [Universal Scene Description],” said Andrews. “Unlike the Gazebo open-source simulation that comes with ROS that everyone learns in university, Omniverse is scalable and cloud-capable, built from the ground up to handle industrial use cases. It works on NVIDIA RTX.”

Isaac Sim uses Omniverse technologies, including advanced physics simulation with PhysX 5 accelerated by GPUs [graphics processing units], photorealism with real-time ray and path tracing, and Material Definition Language (MDL) support for physically based rendering.

“Other solutions are good at individual tasks, but the cloud-native and multi-GPU Isaac Sim allows for scalability across applications,” Andrews said. “It can scale from the desktop using RTX massively across servers.”

“Developers can easily connect a robot’s brain to a virtual world through Isaac SDK and its ROS/ROS 2 interface, fully-featured Python scripting, and plug-ins for importing robot and environment models,” NVIDIA said.

Isaac Sim is now downloadable through the NVIDIA Omniverse Launcher, making it a “one-stop shop” for all kinds of simulation, noted Andrews.

Importing CAD and robot data

In addition, Isaac Sim benefits from Omniverse Nucleus and Omniverse Connectors, which enable collaborative building, sharing, and importing of environments and robot models in Universal Scene Description (USD), said NVIDIA. Isaac Sim includes the PTC Onshape CAD importer to make it easier to import 3D assets.

“Our core belief is that for multiple areas to advance, developers need to trust the basics—they need good physics, amazing rendering abilities, the ability to connect to the world, and collaborative tools,” said Andrews.

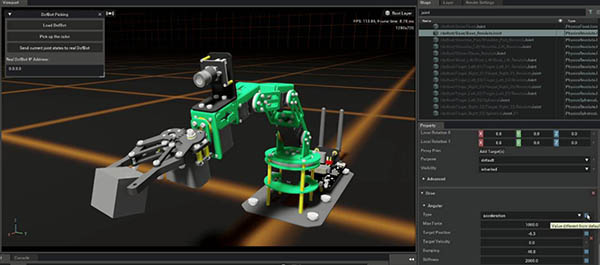

“For example, factory planners may lay out a floor plan, but then the robotics team needs to take the data to build the simulation,” Andrews said. “Omniverse's connectors save time in transferring data between formats. It also includes the physics for robots such as the DofBot, UR10, and Franka Emika, as well as the Kaya reference robot.”

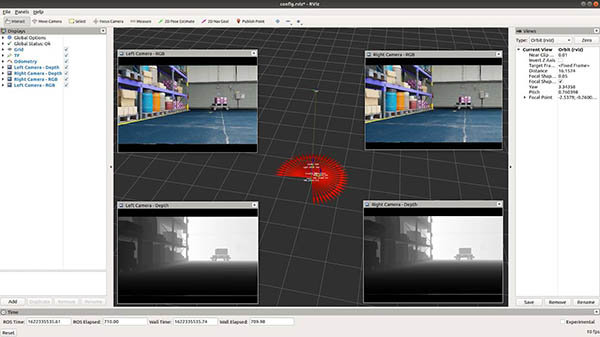

Support for multiple cameras, sensors

NVIDIA's open beta supports fisheye cameras with synthetic data. Isaac Sim also supports ultrasonic sensors, force sensors, inertial measurement unit (IMU) sensors, and custom lidar patterns.

“Isaac Sim sends multi-camera sensors to RViz, the ROS visualization tool,” Andrews said. “3D workflows are important for robotics and autonomous vehicles in construction and other applications.”

Synthetic data generation

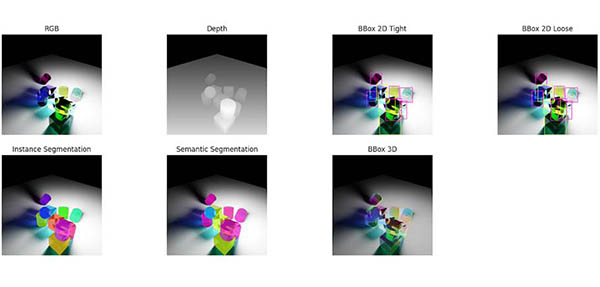

“Getting real-world, properly labeled data is a time-consuming and costly endeavor,” said NVIDIA. This can be particularly difficult in cases where robots or autonomous vehicles must be trained to operate safely around people, it said. In addition, the annotation of data involves a lot of human labor.

Isaac Sim has built-in support for simulated sensor types, including RGB, depth, bounding boxes, and segmentation, said NVIDIA. The open beta generates data in the KITTI format, and it can be used with NVIDIA's Transfer Learning Toolkit to improve model performance with data specific to a use case.
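To make the KITTI reference concrete, here is a minimal sketch of what a 2D bounding-box annotation looks like in the KITTI object label layout, which uses 15 space-separated fields per object. It is not Isaac Sim's exporter: the `Box2D` class and `to_kitti_line` function are hypothetical names made up for illustration, and filling the unused truncation, occlusion, and 3D fields with zeros is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Box2D:
    """Hypothetical 2D annotation: a class label plus pixel coordinates."""
    label: str      # single-token class name, e.g. "pallet"
    left: float
    top: float
    right: float
    bottom: float


def to_kitti_line(box: Box2D) -> str:
    """Format one 2D box as a 15-field KITTI object label line."""
    fields = [
        box.label,                  # field 1: object type
        "0.00",                     # field 2: truncated (placeholder)
        "0",                        # field 3: occluded (placeholder)
        "0.00",                     # field 4: alpha, observation angle (placeholder)
        f"{box.left:.2f}",          # fields 5-8: 2D bbox left, top, right, bottom
        f"{box.top:.2f}",
        f"{box.right:.2f}",
        f"{box.bottom:.2f}",
        "0.00", "0.00", "0.00",     # fields 9-11: 3D height, width, length (unused)
        "0.00", "0.00", "0.00",     # fields 12-14: 3D location x, y, z (unused)
        "0.00",                     # field 15: rotation_y (unused)
    ]
    return " ".join(fields)


if __name__ == "__main__":
    print(to_kitti_line(Box2D("pallet", 10, 20.5, 110, 220)))
```

Each image in a dataset would typically get one text file with one such line per labeled object.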

“Artificial intelligence on the industrial side needs good data rather than the big data,” claimed Andrews. “One of the biggest challenges in the gripper world is picking up translucent things. At the Seattle NVIDIA lab, researchers introduced a large-scale dataset for transparent object learning, boosting the performance for both our approach and competing methods.”

In combination with some data from real-world testing, synthetic data that is more easily labeled can help solve “long-tail problems,” Andrews said. “There are lots of software engineers but relatively few hardware guys, and you'll never have thousands of physical robots for testing. There's a whole ecosystem of companies going out there and having success with synthetic data.”

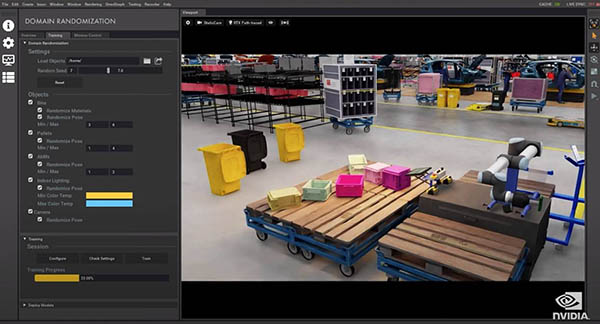

Domain randomization

Domain randomization varies the parameters that define a simulated scene, such as the lighting and the color and texture of materials. One of its main objectives is to enhance the training of machine learning (ML) models by exposing the neural network to a wide variety of domain parameters in simulation.

Isaac Sim can ensure that the synthetic dataset contains sufficient diversity to drive robust model performance, said NVIDIA. This will help the model to generalize when it encounters real-world scenarios. “If we can throw in a lot of data—say, by varying the color or location of a garbage can—we can test what's important for the robot to understand or to ignore,” explained Andrews.

NVIDIA said the Isaac Sim open beta enables users to define a region for randomization. Developers can now draw a box around the part of the scene that is to be randomized, and the rest of the scene will remain static.
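The idea of randomizing only inside a user-defined box can be sketched in a few lines of plain Python. This is an illustration of the concept, not the Isaac Sim API: `SceneObject`, `Region`, `TEXTURES`, and `randomize_scene` are all hypothetical names, and the choice to resample position, color, and texture uniformly is an assumption.

```python
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SceneObject:
    """Hypothetical scene entry; not an Isaac Sim type."""
    name: str
    position: tuple   # (x, y, z) in scene units
    color: tuple      # (r, g, b), each in [0, 1]
    texture: str


@dataclass(frozen=True)
class Region:
    """Axis-aligned box that bounds the part of the scene to randomize."""
    min_corner: tuple
    max_corner: tuple

    def contains(self, point: tuple) -> bool:
        return all(
            lo <= value <= hi
            for lo, value, hi in zip(self.min_corner, point, self.max_corner)
        )


TEXTURES = ("metal", "plastic", "cardboard")  # assumed texture choices


def randomize_scene(objects, region, rng):
    """Return a new scene; only objects inside `region` are randomized."""
    result = []
    for obj in objects:
        if region.contains(obj.position):
            obj = replace(
                obj,
                # New position sampled uniformly inside the region.
                position=tuple(
                    rng.uniform(lo, hi)
                    for lo, hi in zip(region.min_corner, region.max_corner)
                ),
                color=(rng.random(), rng.random(), rng.random()),
                texture=rng.choice(TEXTURES),
            )
        result.append(obj)  # objects outside the region stay unchanged
    return result


if __name__ == "__main__":
    rng = random.Random(0)  # seeded so a dataset can be regenerated
    region = Region((0.0, 0.0, 0.0), (2.0, 2.0, 1.0))
    scene = [
        SceneObject("garbage_can", (1.0, 1.0, 0.0), (0.2, 0.2, 0.2), "plastic"),
        SceneObject("shelf", (5.0, 5.0, 0.0), (0.8, 0.8, 0.8), "metal"),
    ]
    for frame in range(3):
        print(frame, randomize_scene(scene, region, rng))
```

Calling `randomize_scene` once per rendered frame would yield a varied set of scenes in which the shelf never moves and the garbage can changes place and appearance, which mirrors the garbage-can example Andrews gives.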

Modular design for more applications

Isaac Sim is built to address many of the most common robotics use cases, including manipulation, autonomous navigation, and synthetic data generation for model training. Its modular design allows users to customize and extend the toolset to accommodate many applications and environments.

“Simulation with Isaac Sim can accelerate development cycles for prototyping hardware and software,” said Andrews. “It will save the costly, time-consuming process of acquiring data for training AI, and help validate corner cases.”

“We're excited about this release. We don't even know what people will come up with using this enabling technology to solve massive problems,” he added. “We really believe in simulation to help realize the potential of the robotics market.”

NVIDIA has made resources and a tutorial for Isaac Sim on Omniverse available online, including its presentation from its GPU Technology Conference (GTC) 2021.
