The power of video in robot monitoring

Video telemetry is a powerful source of information for understanding the context in which you’re operating. At Formant, we’ve worked to make the most of human visual recognition by building tools tailored specifically to video telemetry in robotics.

Why video matters

Video is especially important for understanding operations. Identifying visual entities around your machine is only one part of what vision data offers. As in any investigation, we try to answer who, what, when, where, why, and how. Video is what lets us understand the time dimension: how a robot interaction actually unfolded. Was the robot moving too fast during an incident? Did the arm move fluidly or jerkily? Did the robot sit in one place for a long time? These questions may be impossible to answer from a low-frame-rate capture or a single still image.

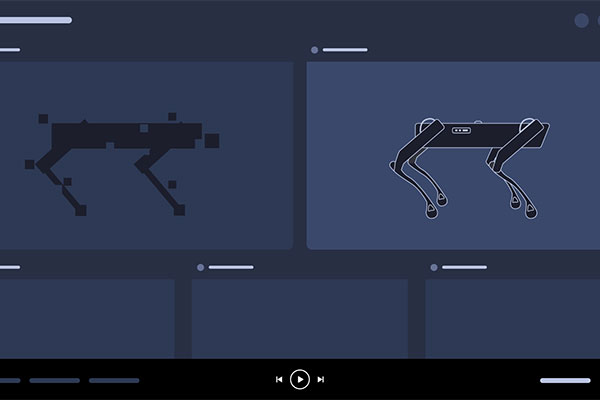

Here is an example with our high-frame-rate searchable video on the left and streamed still frames on the right. As the comparison shows, much of the robot’s motion is lost in the low-frame-rate version. That lost motion can matter when studying dynamics or when investigating an incident such as a collision.

Our solution

Formant solves several challenges with visual data:

- Capturing video from USB cameras and ROS image streams, and offering a programmatic API for uploading images and video

- Reducing the data transfer costs of images by encoding multiple images into compressed video on the device

- Handling latency by using statistics to show a smooth live video stream, delayed slightly by an amount that depends on real-world conditions affecting speed on the device, on the network, and in the browser

- Storing video telemetry efficiently, combined with a custom video player for quick seeking and responsive observation

- Storing non-critical video streams on the device while keeping them available for on-demand remote requests when you need them
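The latency bullet above describes choosing a playback delay from statistics about real-world conditions. Formant doesn’t spell out its method, so the following is only a minimal sketch under our own assumptions: keep a rolling window of observed capture-to-browser latencies and set the target delay to their mean plus `k` standard deviations, clamped to a minimum. The class name `PlayoutDelayEstimator` and the default window, `k`, and floor values are hypothetical.

```python
from collections import deque
from statistics import mean, pstdev


class PlayoutDelayEstimator:
    """Hypothetical adaptive live-playback delay (an illustration, not Formant's algorithm)."""

    def __init__(self, window: int = 50, k: float = 2.0, floor: float = 0.5) -> None:
        self.samples = deque(maxlen=window)  # most recent latencies, in seconds
        self.k = k                           # standard deviations of headroom to add
        self.floor = floor                   # never render closer to "now" than this

    def observe(self, latency_s: float) -> None:
        """Record how long one segment took from capture on the robot to arrival in the browser."""
        self.samples.append(latency_s)

    def target_delay(self) -> float:
        """Seconds behind live at which the player should render."""
        if not self.samples:
            return self.floor
        # pstdev of a single sample is 0.0, so one observation is enough to start.
        return max(self.floor, mean(self.samples) + self.k * pstdev(self.samples))
```

A larger `k` buys smoother playback at the cost of sitting further behind live. A smaller window reacts faster when a network degrades but is noisier.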

Data costs

As common as video is on our phones and personal computers, robots have historically been limited to slow, single-frame image data, constrained either by their tools or by device power. For robots that did have the power to do more, owners faced a non-ideal trade-off:

- Capture the information on the device and wait until long after the robot’s operation to view what happened

- Capture more images, ingest large amounts of data, and take on the cost of storing it

- Accept capturing less data and lose visual context

This trade-off is particularly painful for companies whose devices run on costly mobile data connections. We believe users should be able to make full use of high-performance networks like 5G without worrying about data costs. This is one of the main reasons Formant moved to encoding video in real time on the robot itself: to solve these problems for a world where robots are everywhere.
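To make the trade-off concrete, the arithmetic below compares the hourly data volume of sending every frame as a separate still image with that of sending one encoded video stream. The helper names and the inputs in the usage note (a 50 KB still at 10 fps versus a 1 Mbit/s stream) are purely illustrative assumptions, not Formant measurements; real image sizes and bitrates vary widely with resolution, scene content, and encoder settings.

```python
SECONDS_PER_HOUR = 3600


def stills_bytes_per_hour(frame_bytes: int, fps: float) -> float:
    """Data volume if every frame is sent as an individual still image."""
    return frame_bytes * fps * SECONDS_PER_HOUR


def video_bytes_per_hour(bitrate_bps: float) -> float:
    """Data volume of an encoded video stream at a constant bitrate (bits per second)."""
    return bitrate_bps * SECONDS_PER_HOUR / 8  # 8 bits per byte
```

With those illustrative inputs, `stills_bytes_per_hour(50_000, 10)` gives 1,800,000,000 bytes (about 1.8 GB) per hour, while `video_bytes_per_hour(1_000_000)` gives 450,000,000 bytes (about 450 MB) per hour. The comparison is only meaningful if the video stream preserves the detail you need at that bitrate.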

Video capture for operations is challenging; here’s how we solved it

As an engineer, I want to emphasize that operations create a complex situation that can’t easily be solved by having your robot record a long video to its hard drive.

- Operations teams often want to see things “right now,” which requires a very fast pipeline from device to browser.

- Operations teams also want to keep what they’ve seen, so you need an effective way to store what you capture live and to seek back through it. Sometimes an operator watching a device wants to scrub back 10 seconds, right then and there, to something they just saw and send that point in time to someone else.

- Especially when troubleshooting, you want to see every detail you can; you need something that can effectively be your eyes in a remote location. Ideally, that means high-frame-rate capture (imagine how valuable every frame of video is in the moments before a crash).

- The real world is not graceful. Sometimes machines fail, networks fail, or you are watching on a slow device yourself. You want a video player that handles this gracefully and delivers the best experience it can.

Formant’s data capture is best described as a stream of micro video segments emitted from a robot. We want the compression of video formats, and we want ingestion fast enough to provide reasonably low latency for standard monitoring use cases. To support both live and historical viewing, we created a custom video player optimized for playing micro video segments and for moving quickly through the life of your robot. Because smooth playback is so important to human understanding, we knew we had to make it as good as the robot’s and the viewer’s internet connections allowed.
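One way to picture a stream of micro video segments is as a time-ordered index that maps any moment to the short segment containing it. The sketch below is our assumption about how such an index could work, not Formant’s implementation; `Segment`, `SegmentIndex`, and the `url` field are hypothetical names. Seeking is a binary search over segment start times using the standard-library `bisect` module.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Segment:
    start: float  # stream time (s) of the segment's first frame
    end: float    # stream time (s) just past its last frame
    url: str      # hypothetical storage location of the encoded segment


class SegmentIndex:
    """Hypothetical time-ordered index of micro video segments for one camera stream."""

    def __init__(self) -> None:
        self._starts: List[float] = []      # segment start times, kept sorted
        self._segments: List[Segment] = []  # segments in the same order

    def add(self, seg: Segment) -> None:
        # Segments normally arrive in order, but a late upload is inserted in place.
        i = bisect_right(self._starts, seg.start)
        self._starts.insert(i, seg.start)
        self._segments.insert(i, seg)

    def find(self, t: float) -> Optional[Segment]:
        """Return the segment covering time t, or None if t falls in a gap."""
        i = bisect_right(self._starts, t) - 1
        if i >= 0 and t < self._segments[i].end:
            return self._segments[i]
        return None
```

Scrubbing back 10 seconds from the live edge then becomes `index.find(live_time - 10)` (with `live_time` a hypothetical variable), and the returned segment plus the offset `t - segment.start` is enough to share that exact moment with someone else.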

Browsers are not well equipped to play micro video segments back to back. They were fundamentally built for video experiences like YouTube, and if you try to play segments back to back in a single video element, you’ll find many ways this produces jerky playback because of how browsers handle video elements in the DOM (their loading behavior is largely outside the programmer’s control).

We built a custom player that predictively loads micro segments so they are available just before they are needed, and it uses browser Canvas drawing APIs to make the transition from one video to the next look seamless. We also tuned it for mobile devices, which have far fewer resources for loading multiple videos simultaneously; operators often want to view things from the phone in their pocket or a tablet carried into the field.
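Predictive loading comes down to deciding, at each tick, which segments must be fetched soon. The Canvas drawing happens in the browser and isn’t shown here; this sketch covers only the scheduling decision, under our own assumptions, and `segments_to_prefetch`, the lookahead window, and the `Segment` tuple are hypothetical. One detail worth noting: at 8x playback the player consumes eight seconds of footage per second of wall-clock time, so the fetch horizon has to scale with the playback rate.

```python
from typing import List, NamedTuple, Set


class Segment(NamedTuple):
    start: float  # stream time (s) of the segment's first frame
    end: float    # stream time (s) just past its last frame
    url: str      # hypothetical location of the encoded segment


def segments_to_prefetch(
    segments: List[Segment],
    playhead: float,
    rate: float,
    lookahead_s: float,
    loaded: Set[str],
) -> List[str]:
    """Return URLs of segments needed within the next `lookahead_s` seconds of wall-clock time.

    At `rate`x playback, that window covers rate * lookahead_s seconds of stream time.
    """
    horizon = playhead + rate * lookahead_s
    wanted = []
    for seg in segments:  # assumed sorted by start time
        if seg.start >= horizon:
            break  # everything after this starts beyond the horizon
        if seg.end > playhead and seg.url not in loaded:
            wanted.append(seg.url)
    return wanted
```

A real player would likely also cap how many fetches run in parallel, which matters most on mobile devices with limited resources.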

At the device level, we use FFmpeg to encode image streams efficiently into video, so robot engineers can focus on ROS or a programmatic image API without having to become video encoding specialists themselves.
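As an illustration of on-device encoding, the helper below builds an FFmpeg command that reads still images from a pipe and writes short H.264 segments. The flags are standard FFmpeg options (the `image2pipe` input, `libx264` encoding, and the `segment` muxer), but the specific settings and the helper name `build_ffmpeg_segment_cmd` are our assumptions, not Formant’s configuration. Setting the GOP size (`-g`) to one segment’s worth of frames and disabling scene-cut keyframes (`-sc_threshold 0`) keeps keyframes on the intended segment boundaries, so each segment can be decoded on its own.

```python
from typing import List


def build_ffmpeg_segment_cmd(fps: int, segment_s: int, out_pattern: str) -> List[str]:
    """Hypothetical helper: frames on stdin -> H.264 micro segments of ~segment_s seconds."""
    return [
        "ffmpeg",
        "-f", "image2pipe",           # input is a stream of still images on a pipe
        "-framerate", str(fps),       # assumed constant capture rate
        "-i", "-",                    # read from stdin
        "-c:v", "libx264",
        "-preset", "veryfast",        # trade some compression for lower CPU use
        "-tune", "zerolatency",       # reduce encoder buffering delay
        "-pix_fmt", "yuv420p",        # widely playable pixel format
        "-g", str(fps * segment_s),   # one keyframe per segment
        "-sc_threshold", "0",         # no extra scene-cut keyframes shifting boundaries
        "-f", "segment",
        "-segment_time", str(segment_s),
        "-reset_timestamps", "1",     # each segment's timestamps start at zero
        out_pattern,                  # e.g. "seg_%05d.mp4"
    ]
```

On the robot, one could start it with `subprocess.Popen(cmd, stdin=subprocess.PIPE)` and write each image’s encoded bytes (for example JPEG data) to the process’s `stdin`.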

All your video segments are ultimately stored in high-performance, CDN-backed cloud storage for the frontend, and they can easily be accessed via S3 export if you want to use them to train your machine learning systems or simply want to take your data out of Formant for any reason. You own your data.

Information about everything that’s been ingested comes from a fast telemetry pipeline that uses Redis caches to keep video segment information in server memory, where it can be sent quickly to the frontend to drive live viewing.
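A common way to keep “latest segments” in memory is a short, newest-first list of segment records per stream; with Redis itself, this is typically an `LPUSH` followed by an `LTRIM` on a per-stream list. Formant doesn’t describe its key layout, so the sketch below is an assumption: it substitutes a bounded `deque` for Redis so it runs without a server, and the class name, the `segments:<stream_id>` key format, and the JSON payload are hypothetical.

```python
import json
from collections import defaultdict, deque
from typing import Deque, Dict, List


class RecentSegmentCache:
    """In-memory stand-in for a per-stream "latest N segments" cache (hypothetical)."""

    def __init__(self, max_per_stream: int = 30) -> None:
        # A bounded deque drops the oldest entry on appendleft, like LPUSH + LTRIM 0 N-1.
        self._lists: Dict[str, Deque[str]] = defaultdict(lambda: deque(maxlen=max_per_stream))

    @staticmethod
    def _key(stream_id: str) -> str:
        return f"segments:{stream_id}"

    def push(self, stream_id: str, start: float, end: float, url: str) -> None:
        payload = json.dumps({"start": start, "end": end, "url": url})
        self._lists[self._key(stream_id)].appendleft(payload)  # newest first

    def latest(self, stream_id: str, n: int) -> List[dict]:
        """Up to n most recent segment records, newest first."""
        items = list(self._lists.get(self._key(stream_id), ()))[:n]
        return [json.loads(item) for item in items]
```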

Tackling the difficult challenge of high-speed playback required performance optimization on both the frontend and the backend.

Watch this video to see the power of variable-speed playback and how high-quality images at any speed help identify and diagnose issues.

Where we are now

Formant is excited about this new era of visual monitoring, in which operators can collaboratively watch live video from whole fleets, investigators can scan through the life of a robot at 8x speed, teleoperation sessions can be recorded, and far more detail is visible about what truly happens over a day.

Optimizing the next frontier of visual data

Our efforts to help robot operators better understand robot vision don’t stop at 2D video. 3D data is a new frontier we look forward to tackling with our expanded knowledge of rich media telemetry. Integrations between cloud storage and external data processors let Formant users work with a whole new industry of third-party annotation services for robot images and video. We look forward to all of this growing in 2021 and to scaling our understanding of the machines we work with every day.
