SLAMcore Ltd. today announced that its spatial intelligence algorithms have been optimized for the TDA4x processor line from Texas Instruments, or TI. The London-based company said the combination will enable highly accurate and robust localization, mapping, and perception for a range of industrial automation and robotics tasks.

“TI is one of the foremost developers of microprocessors for industrial robotics,” stated Owen Nicholson, founder and CEO of SLAMcore. “We are proud to have optimized our software to work with the TDA4x family of processors and thus bring our cutting-edge spatial intelligence to the many developers for whom TI processors are their first choice.”

ProMat in March “demonstrated the significant interest in robotic and autonomous solutions in supply chain and manufacturing logistics,” said SLAMcore. The visual simultaneous localization and mapping (vSLAM) company raised Series A funding in May 2022, and it rebranded itself last month.

TDA4VM gives mobile robots real-time perception

The TDA4VM, part of TI's TDA4x family, uses an integrated system-on-chip (SoC) architecture to enable real-time perception, control, and safety capabilities in automated guided vehicles (AGVs) and autonomous mobile robots (AMRs), SLAMcore said. The company added that the processor can reduce design complexity and cost, helping to accelerate development.

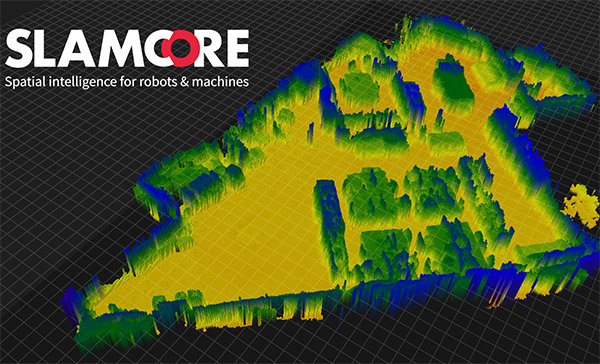

SLAMcore said it has proven the new hardware/software combination in rigorous testing, demonstrating that the TDA4VM can create detailed, accurate "2.5D" maps in real time using an Intel RealSense depth camera.

“We’re creating an algorithm stack for people who don’t have access to armies of Ph.D.s,” Nicholson told Robotics 24/7. “SLAMcore has about 50 people on staff, and 80% are still writing code, including embedded software experts. It took us five years to build out our core technologies, including patented algorithms, with agile development practices.”

At CES in January, SLAMcore demonstrated how the “Perceive” level of its software stack enables robots to segment the objects they see in real time. The company said this allows robots to give people a wider berth than stationary objects as they plan safe movement through chaotic spaces.

The “hardware zoo” at CES included systems from Texas Instruments, Qualcomm, and NVIDIA—all running the same code, said Nicholson.

“Our SDK [software development kit] makes it possible to tune the algorithms, port them, and test them on low-cost sensors,” he added.

Vision, lidar, and 'living, breathing' digital twins

Where does SLAMcore fall in the ongoing tug of war between lidar and vision systems?

“We're not anti-lidar, but we're vision-first,” Nicholson replied. “Lidar will never be as affordable as a CMOS [complementary metal-oxide semiconductor] sensor, but we'll integrate with it. In the warehouse, if you've put down magnetic strips, you can keep using them. Customers can use our solution to fill in the gaps between where other systems work.”

While many sensors provide two-dimensional measurements of distance and position on a plane, true spatial awareness requires real-time perception and semantic understanding in three dimensions, he said.

“SLAMcore can feed into a coordinate system to get a living, breathing digital twin,” said Nicholson. “End users don't want systems to operate in silos, and warehouses are all about optimizing the flow of goods. With good vision, there's the potential for huge benefits in collaboration, mobile manipulation, and markets like agriculture.”

SaaS helps avoid 'pilot purgatory'

“The challenge for a lot of robotics startups is that they can get stuck in 'pilot purgatory' with big companies that need tens of thousands of robots to test deployments at scale,” Nicholson said. “We're not a consultancy, and we avoid non-recurring engineering work for hire, which investors don't like because it's not sustainable.”

SLAMcore charges a recurring monthly fee per seat in a software-as-a-service (SaaS) model for companies in the development phase. “We want people to move as quickly as possible to deployment, and our cost doesn't come out of money made downstream,” said Nicholson.

“When it comes to deployments, it depends on the customer's business model,” he added. “Some are excited to see SaaS or robotics as a service [RaaS], or we can charge a one-off royalty license per unit or annual licenses by units deployed rather than users.”

“We're looking for more companies with the ambition to use vision in their core stacks,” Nicholson said. “We have a demonstration space at our headquarters in London.”
