Robotics companies are working to improve how well their technology perceives and understands its work environment and adapts to dynamic operating conditions on the fly. This situational awareness is enabled by a synergy between sensors and machine learning.

By giving mobile and picking robots more human-like perception of their physical environments, developers are also increasing the level of precision that users can expect from them. This in turn has expanded the range of tasks and services that robots can perform autonomously.

“Robotics is often defined as an intelligent connection between sensing and acting,” said Sean Johnson, chief technology officer at Locus Robotics. “The sensor data is fed to algorithms that allow robots to actively map their working environments, recognize known objects as well as unknown obstacles, predict the movement of objects, predict congestion, and then make the right decisions that are critical, not only for operational effectiveness and productivity, but also worker safety.”

Perception is the first step toward precision

The addition of perception has fundamentally changed the way that autonomous mobile robots (AMRs) navigate the workplace and continues to redefine their relationship with human co-workers. No longer moving from point to point based on x, y, z coordinates plotted in space, AMRs now rely on a type of real-world situational awareness.

“Sensors enable robots to understand their environment,” noted Matt Cherewka, director of business development and strategy at Vecna Robotics. “Advanced, intelligent sensing that fuses multiple sensor types together for 3D perception and applies machine learning and AI for image recognition gives robots a more human-like understanding of their environment for real-time adaptive planning.”

“This gives robots the flexibility to perform more complex tasks and service a broader range of use cases and operating conditions, such as being able to identify and pick a specific SKU or payload from a random position that they haven’t necessarily been hard-programmed for,” he explained. “Using advanced perception in robots results in a flexible system that can adapt to the day-to-day fluctuations in business activity while operating at peak performance and uptime.”
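As a rough illustration of what fusing sensor types can mean in practice, the sketch below combines two independent distance estimates by inverse-variance weighting, a standard textbook technique. It is not a description of Vecna's system; the function name `fuse_estimates`, the sensor readings, and the variances are all hypothetical.

```python
def fuse_estimates(measurements):
    """Fuse independent (value, variance) estimates by inverse-variance weighting.

    Returns (fused_value, fused_variance). Less noisy sensors get more weight.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    if any(var <= 0 for _, var in measurements):
        raise ValueError("variances must be positive")

    weights = [1.0 / var for _, var in measurements]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical readings of the same obstacle, in meters:
# a lidar return (low noise) and a depth-camera estimate (higher noise).
lidar = (2.00, 0.01)
depth_camera = (2.10, 0.04)

fused_distance, fused_variance = fuse_estimates([lidar, depth_camera])
```

With these made-up numbers the fused distance lands closer to the lidar reading, and the fused variance is smaller than either input, which is the basic argument for combining sensors rather than picking one.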

Data-rich sensors enable greater precision

To advance the capabilities of AMRs, developers can deploy a variety of sensors to measure factors like distance, force, and torque. Of all these sensors, robot designers rely most heavily on imaging.

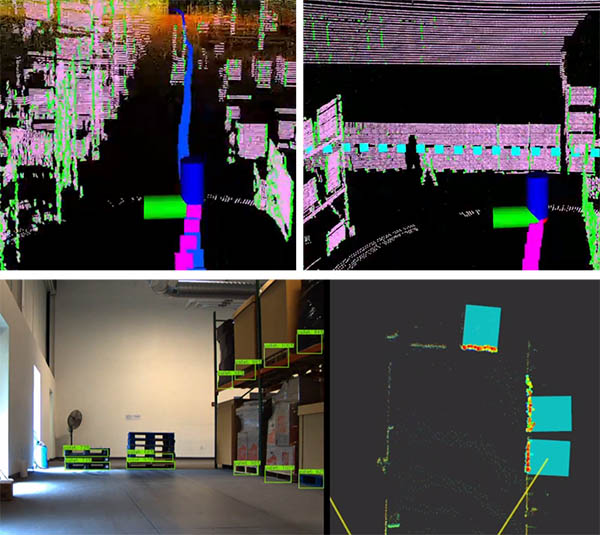

“In the past decade, the most impactful sensors have been depth imaging sensors,” said Tom Galluzzo, founder and chief technology officer of IAM Robotics. “In these applications, 3D cameras and 2D and 3D lidar are used to provide data points about the physical features of an environment for autonomous robots. These sensors are capable of measuring the distance of physical objects and providing the geometric information back to the robot.”
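To make "providing the geometric information back to the robot" concrete, here is a minimal sketch of how a 2D lidar scan, which arrives as a list of ranges at evenly spaced angles, might be turned into x, y points in the sensor's frame. The function names, the scan values, and the convention that infinite or over-range readings mean "no return" are assumptions for illustration, not any vendor's interface.

```python
import math


def scan_to_points(ranges, angle_min, angle_increment, max_range):
    """Convert a 2D lidar scan from polar form into (x, y) points in the sensor frame."""
    points = []
    for i, r in enumerate(ranges):
        # Assumed convention: inf, NaN, non-positive, or over-range values mean no return.
        if not math.isfinite(r) or r <= 0.0 or r > max_range:
            continue
        theta = angle_min + i * angle_increment
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


def nearest_obstacle(points):
    """Return the distance to the closest point, or None if there are no points."""
    if not points:
        return None
    return min(math.hypot(x, y) for x, y in points)


# Hypothetical three-beam scan at -90, 0, and +90 degrees; the last beam sees nothing.
scan = [2.0, 1.0, float("inf")]
points = scan_to_points(scan, angle_min=-math.pi / 2, angle_increment=math.pi / 2, max_range=10.0)
closest = nearest_obstacle(points)
```

A real scanner produces hundreds of beams per sweep, but the conversion is the same; the resulting points are what mapping and obstacle-avoidance code would typically consume.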

To further improve perception and achieve greater precision, developers are enhancing the mix and number of sensors deployed.

“For additional situational awareness, we use multiple 3D sensors to identify moving and stationary objects in proximity to our robots and safety-rated 2D lidar scanners around the robot to ensure we meet and exceed regulatory safety requirements,” said Todd Graves, chief technology officer of Seegrid. “Today, we’re also using 3D lidar advancements to augment our ability to accurately map the infrastructure around us—things like tables, racks, conveyors, and payloads.”

“This increased perception helps the robot identify and interact with its immediate surroundings and infrastructure,” he added.

Bear in mind that to extract the full benefit of the mix and number of sensor technologies in play, robot developers have also had to harness high-speed, high-capacity processors and the latest machine learning tools and techniques.

“It’s really the convergence of advanced sensing, processing, and AI trends and capabilities that enables robotic perception that senses and understands the environment and can execute the task at hand,” said Vince Martinelli, head of product and marketing at RightHand Robotics.

Perception enables dynamic, real-world applications

Leveraging intelligent perception, autonomous robots are now capable of high levels of precision, which allows them to provide value in an expanding range of applications. These include e-commerce order fulfillment, store replenishment, and material handling. Companies deploying these systems are realizing increased throughput and higher efficiency.

One example of the role of perception can be seen in evo's deployment of the collaborative LocusBots from Locus Robotics.

The e-commerce retailer rolled out 10 AMRs in its 165,000-sq.-ft. fulfillment facility to streamline picking operations. evo needed the robots to better meet the demands of seasonal peak sales, compensate for labor shortages, and accommodate pandemic safety requirements, such as social distancing.

With perception capabilities, the LocusBots automatically learned the most efficient travel routes through the warehouse and were able to handle multiple container configurations. Furthermore, the collaborative mobile robot met a wide range of tote and multi-bin picking requirements, and its lightweight structure enabled it to safely operate alongside workers, even in tight working conditions.

The LocusBot system was implemented in 53 days. Workers who were picking an average of 35 units per hour (UPH) with manual carts started to pick an average of 90 UPH with LocusBots. Some workers picked as many as 125 UPH.
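The size of that improvement is easier to judge as a ratio. The short calculation below uses only the UPH figures reported above; the helper name `throughput_gain` is ours, and the "peak" line compares the fastest pickers against the earlier manual average, so it is an upper bound rather than a like-for-like comparison.

```python
def throughput_gain(before_uph, after_uph):
    """Return (multiple, percent_increase) for a change in units picked per hour."""
    multiple = after_uph / before_uph
    percent_increase = (after_uph - before_uph) / before_uph * 100
    return multiple, percent_increase


avg_multiple, avg_pct = throughput_gain(35, 90)      # manual average vs. LocusBot average
peak_multiple, peak_pct = throughput_gain(35, 125)   # manual average vs. fastest pickers

print(f"average: {avg_multiple:.2f}x (+{avg_pct:.0f}%)")   # average: 2.57x (+157%)
print(f"peak: {peak_multiple:.2f}x (+{peak_pct:.0f}%)")    # peak: 3.57x (+257%)
```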

“In the future, we see an opportunity in directed putaway, as well as transporting goods from one part of the warehouse to another,” said Spencer Earle, director of supply chain at evo.

ZF Group uses machine vision for manufacturing

In an example of autonomous robot perception at work in an industrial setting, German automotive supplier ZF Group wanted to automate machine tending in its high-volume milling stations, where it manufactures gears.

To perform this application, ZF deployed a system that consisted of a MIRAI kit from Micropsi Industries, which included a control box and a camera. It also included a Universal Robots UR10e collaborative robot arm, an OnRobot force-torque sensor, and a Schunk gripper.

The MIRAI vision-based control system uses artificial intelligence to enable robots to deal with complexity in production environments. Once fitted with MIRAI, a robot can perceive its workspace and correct its movement where needed to perform a task. A background in engineering or AI is not required to train or retrain MIRAI.

The metal rings arrive in a crate in layered beds, with the workpieces laid closely together on their flat sides. The UR10e cobot has been programmed via its native controller to move above individual rings in the crate.

Once the robot is above a ring, the MIRAI system takes control, moving the UR10e to the nearest ring and placing the gripper in gripping position. After this position is reached, the robot’s native system reassumes control. The cobot then picks up the ring, moves it to the conveyor belt, and places it on the belt.
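The handoff described here can be pictured as a small state machine in which each phase of the pick cycle has exactly one controller in charge. The sketch below is only a way to visualize that sequence; the phase names, the `OWNER` table, and `run_cycle` are hypothetical and do not reflect the MIRAI or Universal Robots software interfaces.

```python
from enum import Enum, auto


class Phase(Enum):
    APPROACH = auto()        # native controller moves the arm above a ring
    VISION_ALIGN = auto()    # vision-based controller guides the gripper into gripping position
    PICK_AND_PLACE = auto()  # native controller grips, moves to the conveyor, releases
    DONE = auto()


# Which controller is in charge during each phase (labels are illustrative).
OWNER = {
    Phase.APPROACH: "native",
    Phase.VISION_ALIGN: "vision",
    Phase.PICK_AND_PLACE: "native",
}

ORDER = [Phase.APPROACH, Phase.VISION_ALIGN, Phase.PICK_AND_PLACE, Phase.DONE]


def next_phase(phase, step_complete):
    """Advance to the next phase only when the current one reports completion."""
    if phase is Phase.DONE or not step_complete:
        return phase
    return ORDER[ORDER.index(phase) + 1]


def run_cycle():
    """Walk one pick cycle and record which controller owned each phase."""
    phase = Phase.APPROACH
    log = []
    while phase is not Phase.DONE:
        log.append((phase.name, OWNER[phase]))
        # In a real cell, completion would come from the robot; here every step succeeds.
        phase = next_phase(phase, step_complete=True)
    return log


cycle_log = run_cycle()
```

The point of the structure is that control changes hands only at well-defined boundaries: the vision-driven phase handles the variable part of the task, and the preprogrammed phases bracket it.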

Micropsi said the MIRAI-based picking system proved to be faster and more reliable than classic automation, which would be either unable to deal with these complexities or very expensive to set up. Even when customized, classic automation systems would be tailored for this task alone and no others, said the company.
