The next generation of robots could benefit from open-source software designed to take advantage of the latest compute hardware. NVIDIA Corp. and Open Robotics today announced two features in the Humble ROS 2 release intended to improve performance on platforms that offer hardware accelerators.

“The Robot Operating System evolved in a CPU-only world, but newer SoC architectures with onboard hardware accelerators required us to make changes to maximize efficiencies,” said Gerard Andrews, senior product marketing manager for robotics at NVIDIA. “We identified two things for this release—type adaptation and type negotiation.”

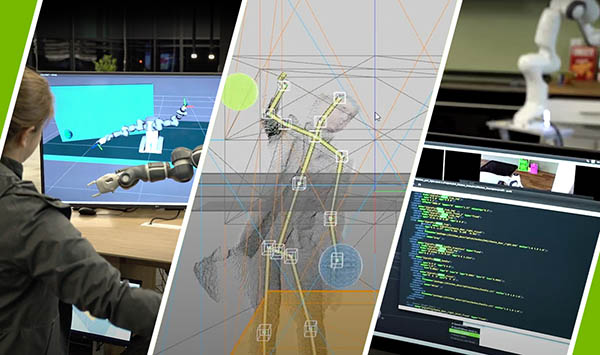

The features are intended to help robotics developers incorporate machine learning and computer vision into ROS-based applications and will be available in the next Isaac ROS Developer Preview in late June, he said.

Type adaptation eliminates processing overhead

While hardware accelerators often require data to be translated into another format to deliver optimal performance, type adaptation (REP-2007) allows ROS nodes to work in the format best suited for the hardware, wrote Andrews in a blog post.

“This allows a processing pipeline, a graph of nodes, using the adapted type to eliminate memory copies between the CPU and the memory accelerator,” he said. “Unnecessary memory copies consume CPU compute, waste power, and slow down performance, especially as the sizes of the images increase.”

“You don't want to waste resources, because then the hardware isn't doing any inferences—it's just changing data formats,” Andrews told Robotics 24/7. “Instead of copying data back to CPUs and the next node to GPUs [graphics processing units], you can put together a sequence of nodes and only copy it back to the CPU when the GPU is done, when it is back with the ticket.”
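The saving Andrews describes can be shown with a toy model. The sketch below is not ROS code: `DeviceImage`, `upload`, `download`, and the two stages are hypothetical stand-ins, and the "accelerator" is only simulated by counting transfers. In ROS 2 itself, type adaptation is exposed to C++ nodes through `rclcpp::TypeAdapter`; this Python version only illustrates why keeping data in the adapted type between nodes reduces copies.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class CopyCounter:
    """Tracks simulated host<->device transfers (hypothetical bookkeeping)."""
    to_device: int = 0
    to_host: int = 0

    @property
    def total(self) -> int:
        return self.to_device + self.to_host


@dataclass
class DeviceImage:
    """Stand-in for an accelerator-resident buffer (the 'adapted type')."""
    pixels: List[int]


def upload(pixels: List[int], counter: CopyCounter) -> DeviceImage:
    """Simulate a CPU-to-accelerator copy."""
    counter.to_device += 1
    return DeviceImage(list(pixels))


def download(image: DeviceImage, counter: CopyCounter) -> List[int]:
    """Simulate an accelerator-to-CPU copy."""
    counter.to_host += 1
    return list(image.pixels)


Stage = Callable[[DeviceImage], DeviceImage]


def brighten(image: DeviceImage) -> DeviceImage:
    return DeviceImage([min(p + 10, 255) for p in image.pixels])


def threshold(image: DeviceImage) -> DeviceImage:
    return DeviceImage([255 if p >= 128 else 0 for p in image.pixels])


def run_with_cpu_messages(pixels: List[int], stages: List[Stage],
                          counter: CopyCounter) -> List[int]:
    """Each node receives a host-side message, so every stage uploads and downloads."""
    for stage in stages:
        pixels = download(stage(upload(pixels, counter)), counter)
    return pixels


def run_with_adapted_type(pixels: List[int], stages: List[Stage],
                          counter: CopyCounter) -> List[int]:
    """Nodes exchange the device-resident type: copy in once, copy out once."""
    image = upload(pixels, counter)
    for stage in stages:
        image = stage(image)
    return download(image, counter)
```

With two stages, the message-per-node pipeline records four simulated transfers, while the adapted pipeline records two and produces the same pixels. The gap widens as stages are added.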

Type negotiation identifies best formats for performance

In collaboration with Open Robotics, NVIDIA is also making type negotiation (REP-2009) available in the latest ROS release.

According to Andrews, this feature “allows the different ROS nodes in a processing pipeline to advertise their supported types so that the formats yielding the ideal performance are chosen. The ROS framework performs this negotiation process and maintains compatibility with legacy nodes that don’t support negotiation.”
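As a rough illustration of that idea, the sketch below has each node advertise the formats it supports with a preference weight, then picks the format that every node supports and that has the highest combined weight. The `negotiate` function, the weights, and the `gpu_image` format name are all hypothetical, and the actual negotiation procedure defined in REP-2009 may differ. A legacy node is modeled as one that understands only a default message type, assumed here to be `sensor_msgs/Image`.

```python
from typing import Dict, List, Optional

# Fallback format assumed for nodes that do not take part in negotiation.
LEGACY_TYPE = "sensor_msgs/Image"


def negotiate(publisher: Dict[str, float],
              subscribers: List[Optional[Dict[str, float]]]) -> str:
    """Return the format all nodes support with the highest summed preference.

    Each dict maps a format name to a preference weight (higher is better).
    A subscriber given as None models a legacy node that does not negotiate
    and only understands LEGACY_TYPE.
    """
    prefs = [publisher] + [
        s if s is not None else {LEGACY_TYPE: 1.0} for s in subscribers
    ]

    # Only formats advertised by every node are candidates.
    common = set(prefs[0])
    for p in prefs[1:]:
        common &= set(p)
    if not common:
        raise ValueError("no format is supported by every node")

    # Sorting first makes tie-breaking deterministic (alphabetical order).
    return max(sorted(common), key=lambda fmt: sum(p[fmt] for p in prefs))
```

When every node in this toy graph advertises `gpu_image` with a high weight, that format wins; adding a single legacy subscriber forces the whole topic back to `sensor_msgs/Image`, which mirrors the compatibility behavior described in the quote.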

The Santa Clara, Calif.-based company claimed that the new features reduce software overhead, allowing developers to take full advantage of hardware accelerators and platforms such as NVIDIA Jetson Orin.

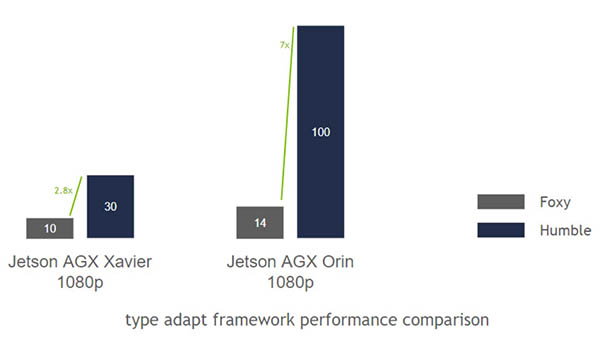

“For example, in NVIDIA Isaac Transport for ROS, or NITROS, we have a certain number of pipelines from a camera across to AprilTag or a specific DNN [deep neural network],” Andrews explained. “We'll release a series of these over the coming months. By staying on the GPU, we're seeing up to a 3X speedup on Xavier and a 7X improvement on Orin on our type adaptation benchmark, which we tested running ROS 2 Foxy and Humble. Hardware acceleration is a hot topic in the ROS world right now.”

NVIDIA and Open Robotics continue close collaboration

The new features within ROS 2 are intended to ensure compatibility with existing tools, workflows, and codebases, said the partners.

“As ROS developers add more autonomy to their robot applications, the on-robot computers are becoming much more powerful,” stated Brian Gerkey, CEO of Open Robotics. “We have been working to evolve the ROS framework to make sure that it can take advantage of high-performance hardware resources in these edge computers.”

“Working closely with the NVIDIA robotics team, we are excited to share new features in the Humble release that will benefit the entire ROS community’s efforts to embrace hardware acceleration,” added Gerkey.

“We kicked off the project to work on hardware acceleration for ROS 2 in October 2021,” Andrews said. “Our engineers work with those of Open Robotics—it's a small team, but it's good to know that the work you do will go out to a huge community.”

“We know a lot about offloading CPUs to GPUs, and while not everyone might use ROS 2, we think that even proprietary software developers will have familiarity with how it's done in ROS and reuse parts of our releases,” he noted.

NVIDIA introduces NITROS, GEMs for robot perception

Andrews described NITROS as the implementation of the type adaptation and type negotiation features. The first NITROS release will include three processing pipelines made up of GEMs, or Isaac ROS hardware-accelerated modules, and NVIDIA plans to release more later this year.

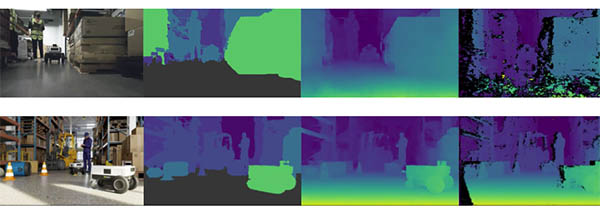

The next Isaac ROS release will also contain two new DNN-based GEMs designed to help roboticists with common perception tasks. The first one, ESS, is a DNN for stereo camera disparity prediction. The network and partners' cameras can provide vision-based continuous depth perception for autonomous mobile robots (AMRs), said Andrews.
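For readers unfamiliar with stereo vision, disparity converts to metric depth through the standard pinhole relation: depth equals focal length times camera baseline divided by disparity. The sketch below shows only that conversion. It is not part of ESS or Isaac ROS, and the function name and example camera parameters are hypothetical.

```python
def disparity_to_depth(disparity_px: float,
                       focal_length_px: float,
                       baseline_m: float) -> float:
    """Convert a stereo disparity in pixels to depth in meters.

    Uses the rectified-stereo relation depth = focal_length * baseline / disparity.
    A disparity of zero or less means no valid match, reported here as infinity.
    """
    if disparity_px <= 0:
        return float("inf")
    return focal_length_px * baseline_m / disparity_px
```

Because depth is inversely proportional to disparity, halving the disparity doubles the estimated distance, so small disparity errors matter most for far-away objects.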

The other GEM, Bi3D, is designed for vision-based obstacle prediction. The DNN, based on work from NVIDIA Research, is optimized to run on NVIDIA's deep learning accelerator (DLA) on Jetson to conserve compute resources, said Andrews.

“The network can predict if an obstacle is within one of four programmable proximity fields from a stereo camera,” he said. “It's pretrained for commercial robots using both synthetic and real data to train the models.”

Bi3D and ESS join stereo_image_proc, a previously released computer vision stereo depth disparity routine, to offer three diverse, independent functions for stereo camera depth perception, said NVIDIA.

GEMs to help developers get started with AI perception

“We already have nine or 10 GEMs,” said Andrews. “Without them, the cost of deployment is more, and robots need structured environments rather than robots adapting to the environment.”

“More than half of mobile robots are still AGVs [automated guided vehicles]—that's where these toolboxes can bring more to bear for innovation,” he said. “Compatibility lets us leverage everything from Orin on the compute side and RTX to Omniverse for simulation and EGX edge servers to build different SDKs [software development kits] for different applications and markets.”

NVIDIA said ROS developers interested in integrating AI perception into their products can get started now with Isaac ROS GEMs.

NVIDIA presents research at ICRA

NVIDIA is also presenting its latest research and workshops around robotics this week at the International Conference on Robotics and Automation (ICRA), held by the Institute of Electrical and Electronics Engineers (IEEE) in Philadelphia.

The NVIDIA Robotics Research Lab plans to present papers on how robot skills can be learned in NVIDIA Isaac Sim's photorealistic, physics-accurate simulation and then transferred to real systems and environments, an approach known as Sim2Real. It will also discuss how accurate localization can improve autonomous vehicle and indoor navigation, as well as the use of AI and pose estimation for robotics development.

NVIDIA will also present on how service robots can reliably manipulate deformable objects, such as sponges, mops, or bedding, in warehouses or hospitals. It will describe how systems can recognize and rearrange objects into meaningful arrangements like table settings, a vision-based task that was previously impossible.

In addition, the company's researchers will present a novel model-predictive control framework to bridge the gap between grasp selection and motion planning for human-robot handoffs. They have developed a new simulation benchmark, HandoverSim, enabling the robotics community to standardize and reproduce evaluation of receiver policies.

NVIDIA's sessions at ICRA will include multiple workshops on simulation and quadruped locomotion, scaling robot learning, intelligent control methods for human-robot interaction, structured inductive bias for robot learning, and using physics accelerators for reinforcement learning. Its experts will also present on bi-manual manipulation, representing and handling deformable objects, and robotics for climate change.

The company is exhibiting at Booth 200, where representatives from Connect Tech, an elite member of the NVIDIA Partner Network, will be available to answer questions. The NVIDIA robotics research team is a part of NVIDIA Research, which comprises 300 researchers around the globe focused on topics spanning AI, computer graphics, computer vision, and self-driving cars.
