Editors’ Picks

Found in Robotics News & Content, with a score of 24.94

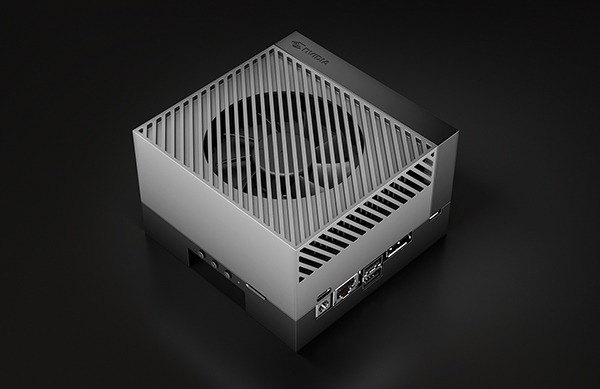

Customers from Japan to Ecuador and Sweden are using NVIDIA DGX H100 systems like AI factories to manufacture intelligence, according to NVIDIA. They’re creating services that offer AI-driven insights in finance, healthcare, law, IT and telecom, and working to transform their industries in the process. Among the dozens of DGX H100 use cases, one aims to predict how factory equipment will age, so tomorrow’s plants can be more efficient. Called Green Physics AI, it adds information like an object’s CO2 footprint, age, and energy consumption to SORDI.ai, which is billed as the largest synthetic dataset in manufacturing. The dataset…

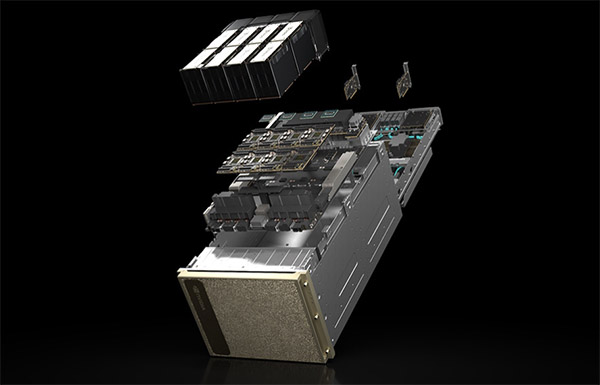

NVIDIA unveiled NVIDIA DGX A100, the third generation of its advanced AI system, delivering 5 petaflops of AI performance and, for the first time, consolidating the power and capabilities of an entire data center into a single flexible platform. DGX A100 systems are available immediately and have begun shipping worldwide, with the first order going to the U.S. Department of Energy’s (DOE) Argonne National Laboratory, which will use the cluster’s AI and computing power to better understand and fight COVID-19. “NVIDIA DGX A100 is the ultimate instrument for advancing AI,” says Jensen Huang, founder and CEO of NVIDIA. “NVIDIA DGX is the…

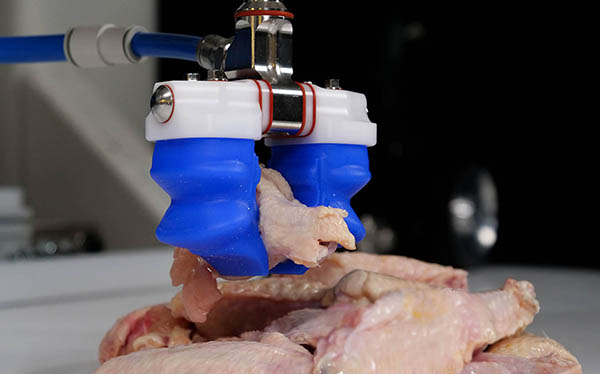

…promise for multibillion-dollar industries. Soft Robotics Inc. is using NVIDIA Isaac Sim to close the simulation-to-reality gap in pick-and-place operations for packaged food. “We’re selling the hands, the eyes, and the brains of the picking solution,” said David Weatherwax, senior director of software engineering at Soft Robotics. Unlike other industries that have adopted robotics, the $8 trillion food market has been slow to develop robots to handle variable items in unstructured environments, said Bedford, Mass.-based Soft Robotics. Founded in 2013, the company recently secured $26 million in Series C funding from Tyson Ventures, Marel, and Johnsonville Ventures. Getting a grip…

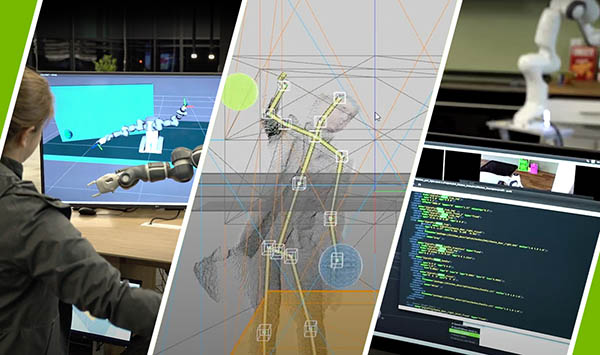

…simulation to accelerate testing and address potential edge cases. NVIDIA Corp. today announced the open beta of its Isaac Sim engine, which includes several new features. Isaac Sim, which is built on NVIDIA's Omniverse platform, now includes support for multiple cameras and sensors, compatibility with ROS 2, the ability to import CAD [computer-aided design] assets, and synthetic data generation and domain randomization. These features will help designers train a wide range of robots by deploying “digital twins,” in which the robots are tested in an accurate virtual environment, said the Santa Clara, Calif.-based company. “Currently, the simulation-to-reality gap means that most developers…

…machines, as well as general manager for robotics at NVIDIA Corp., has some ideas. Gopalakrishna leads the business development team that focuses on robots, drones, the industrial Internet of Things (IIoT), and enterprise collaboration products. He holds a bachelor of engineering from the National Institute of Engineering in India. Before joining NVIDIA in 2016, Gopalakrishna was the global head of platform and technology strategy, leading the chief technology officer's office at Sony Mobile Communications. He was responsible for products ranging from phones, tablets, and wearables to IoT platforms. Gopalakrishna shared his thoughts with Robotics 24/7 about Santa Clara, Calif.-based NVIDIA's…

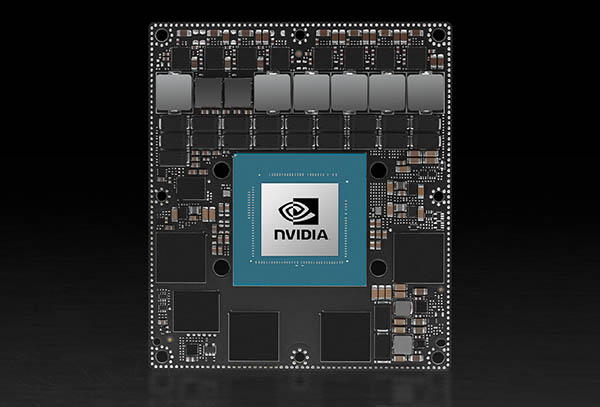

…designed to take advantage of the latest compute hardware. NVIDIA Corp. and Open Robotics today announced two features in the ROS 2 Humble release intended to improve performance on platforms that offer hardware accelerators. “The Robot Operating System evolved in a CPU-only world, but newer SoC architectures with onboard hardware accelerators required us to make changes to maximize efficiencies,” said Gerard Andrews, senior product marketing manager for robotics at NVIDIA. “We identified two things for this release—type adaptation and type negotiation.” The features are intended to help robotics developers incorporate machine learning and computer vision into ROS-based applications and will…

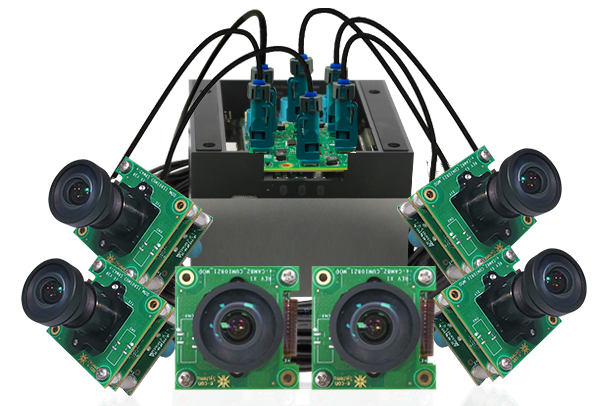

…week launched the NileCAM81_CUOAGX, a 4K multi-camera system for NVIDIA's Jetson AGX Orin and Xavier. The company said it is suitable for autonomous mobile robots, agricultural robots, and smart trolleys. NileCAM81 can also be used in surround-view systems, smart checkout systems, smart traffic management, and sports broadcasting and analytics. “NVIDIA Jetson Orin and AGX Xavier are the most powerful energy-efficient processors for autonomous machines,” stated Gomathi Sankar, head of the industrial cameras business unit at e-con Systems. “Such machines require superior-quality video feed for object detection, recognition, and characterization. 4K video feed and HDR [high dynamic range] performance are essential…

…oceanic monitoring by “sailing seas of data,” according to NVIDIA Corp. The startup’s nautical data-collection technology has tracked hurricanes in the North Atlantic, discovered a 3,200-ft. underwater mountain in the Pacific Ocean and begun to help map the entirety of the world’s ocean floor. Alameda, Calif.-based Saildrone develops autonomous uncrewed surface vehicles (USVs) that carry a wide range of sensors. NVIDIA Jetson modules process its data streams for artificial intelligence at the edge. They are optimized in prototypes with the NVIDIA DeepStream software development kit (SDK) for intelligent video analytics. Saildrone said it is seeking to make ocean data collection…

…At its Spring 2022 GPU Technology Conference, or GTC, NVIDIA Corp. today announced the availability of its Jetson AGX Orin developer kit and its Isaac Nova Orin architecture for autonomous mobile robots. “Modern fulfillment centers are evolving into technical marvels — facilities operated by humans and robots working together,” said Jensen Huang, founder and CEO of NVIDIA. He said new processors, software, and simulation capabilities will lead to “the next wave of AI,” including robots able to “sense, plan, and act.” NVIDIA claimed its invention of the graphics processing unit (GPU) in 1999 “sparked the growth of the PC gaming…

…barriers to deployment. At CES in Las Vegas today, NVIDIA Corp. announced major updates to Isaac Sim, its tool for building and testing virtual robots across varied operating conditions in simulated environments. The installed base of industrial and commercial robots will grow by more than sixfold—from 3.1 million in 2020 to 20 million in 2030, according to ABI Research. “Broadly speaking, the challenge [for robotics developers] falls into two categories,” said Deepu Talla, vice president and general manager for embedded and edge computing at NVIDIA. “The first is continuous development and operations of robots.” “The second is runtime on physical…

The NVIDIA Isaac robotics platform is tapping into the latest generative AI and advanced simulation technologies to accelerate AI-enabled robotics. At GTC 2024, NVIDIA announced Isaac Manipulator and Isaac Perceptor, collections of foundation models, robotics tools, and GPU-accelerated libraries. During Monday’s keynote, NVIDIA founder and CEO Jensen Huang demonstrated Project GR00T, a general-purpose foundation model for humanoid robot learning. Project GR00T uses various new tools from the NVIDIA Isaac robotics platform to create AI for humanoid robots. “Building foundation models for general humanoid robots is one of the most exciting problems to solve in AI today,” Huang said.…

…and work together in an office. Omniverse: This month, NVIDIA GTC also took place online, with CEO Jensen Huang delivering his keynote from his own kitchen. One of the key announcements was Omniverse, a real-time ray-traced design environment, which Huang calls “an open platform for collaboration and simulation.” It is a new platform for editors and viewers to share scenes and models. The content is ray traced, so what you see inside Omniverse, such as light bouncing, reflections, and shadows, is physically accurate. In terms of compute cycles, these features are expensive to generate, so Omniverse uses a…