Editors’ Picks

Found in Robotics News & Content, with a score of 6.14

…Pridmore: Since our system uses suction cups and advanced perception pipelines to understand the size, shape, and weight of each item, our robots are able to handle delicate items like glasses gently and precisely at speed. What's the throughput with the picking system compared with manual processing? Pridmore: The OSARO Robotic Autobagging Solution was designed to meet or exceed human operator performance, not just in throughput, but in quality, accuracy, and uptime. For “last-meter fulfillment,” is there a handoff from or to people at other stages? How much human supervision is necessary? Pridmore: No, the OSARO Robotic Bagging System automatically…

Found in Robotics News & Content, with a score of 6.14

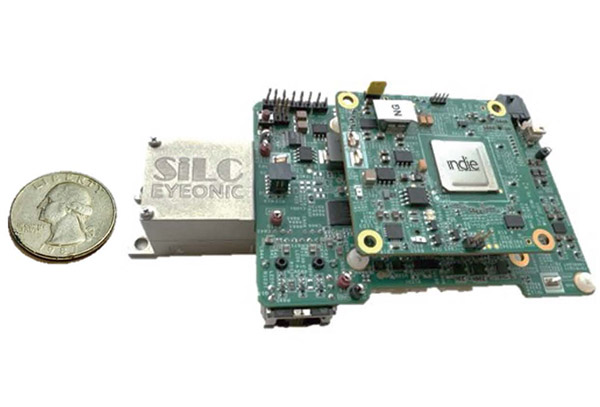

…time-of-flight (ToF) sensors, asserted Muenster. He cited its long-range perception with high precision, immunity to interference, and ability to provide per-point instantaneous velocity and motion measurement. “Current lidar is suboptimal—it's prone to multi-user interference, needs high wattage, and has a large form factor, so it's not scalable to mass-market deployment,” said Muenster. “We know the limits of ToF, and FMCW is becoming standard. It's the same as with radar, which was first pulsed and is now coherent.” “SiLC has a unique business model in that we're building a semiconductor, not a lidar box for one application or vertical,” he said.…

Found in Robotics News & Content, with a score of 6.10

…Holdings Inc. Scout and TASA Cypress, Calif.-based SARA's Artificial Perception and Threat Awareness (APTA) Division develops sensing and collaboration systems using AI and edge processing for both the military and commercial aerospace markets. They include radio-frequency geolocation systems, acoustic-based threat-detection systems, and other sensors for unmanned aircraft systems (UAS). The company said it has more than 25 years of experience in developing airborne acoustic sensors needed for beyond visual line-of-sight (BVLOS) flights, addressing business and regulatory hurdles to commercial UAS operations. SARA said its Terrestrial Acoustic Sensor Array (TASA) can effectively identify other aircraft and maintain a safe distance from…

Found in Robotics News & Content, with a score of 6.09

…so that mowers know the boundaries of grass through perception only. With a camera and our full stack, the robot could explore a yard, build a map, and mow in one session.” “We are building large world models—LWMs—to power robots with an AI brain so they can work in all types of outdoor environments,” added Murty. “What OpenAI did with language, Electric Sheep wants to do for outdoor robotics—under the air cover of a profitable business model.” “This is different from large language models,” he said. “We're building a world model from first-order physics principles rather than taking noisy…

Found in Robotics News & Content, with a score of 6.06

…customers. “How the semantic information is populated on the perception AI side, how we plan paths, how we navigate, all of that is tied to the fleet,” Velusamy said. “What information do the fleets need as well? They're architected together and what it ultimately gives us is to have the right control signals for the optimization that runs in the cloud. That means better optimization, better workflows, better ROI and better planning.” Want to learn more about fleet management? This article was featured in the June 2024 Robotics 24/7 Special Focus Issue titled “Orchestrating mobile robot fleets for success.”

Found in Robotics News & Content, with a score of 6.03

…Boulder, Colo.-based startup specializes in motion planning, control, and perception for robots. It said its flagship product, MoveIt Studio, “revolutionizes the way operators plan and execute robotic tasks with unparalleled precision and efficiency.” In April, PickNik partnered with Motiv Space Systems Inc. to integrate its software with Motiv's xLink robot arms. SBIR to enable PickNik fault detection, reporting NASA said it increasingly needs highly autonomous robots to maintain critical spaceflight hardware, and its first SBIR award to PickNik Robotics is for a project titled “A Framework for Failure Management and Recovery for Remote Autonomous Task Planning and Execution.” PickNik is…

Found in Robotics News & Content, with a score of 6.03

…company is testing its systems and next-generation approaches to perception and decision-making in the San Francisco Bay area, Pittsburgh, and Dallas. Transaction details “We believe Aurora will be the first to commercialize self-driving technology at scale for the U.S. trucking and passenger transportation markets based on its industry-leading team, technology and partnerships,” said Mark Pincus, Co-Founder and Director of Reinvent Technology Partners Y. The special-purpose acquisition company (SPAC) is led by Pincus, Michael Thompson, and Reid Hoffman. Investors and Aurora partners have committed $1 billion in a private investment in public equity (PIPE), and the proposed transaction represents an equity…

Found in Robotics News & Content, with a score of 6.02

…is in travel, and robots have limitations in grasping, perception, and judgement.” “With zone-based each picking, FlexShelf optimizes order and batch picking to carts,” he added. “Humans aren't going anywhere anytime soon—for example, you might want a bathing suit and a surfboard at the same time.” “We still have to demonstrate the value of automation. Some of that value is in having orchestration software that optimizes both people and machines in an end-to-end process,” said Lawton. “We make it possible to program complex tasks with visual blocks and a drag-and-drop interface.” “Customers have been asking about ease of use and…

Found in Robotics News & Content, with a score of 5.94

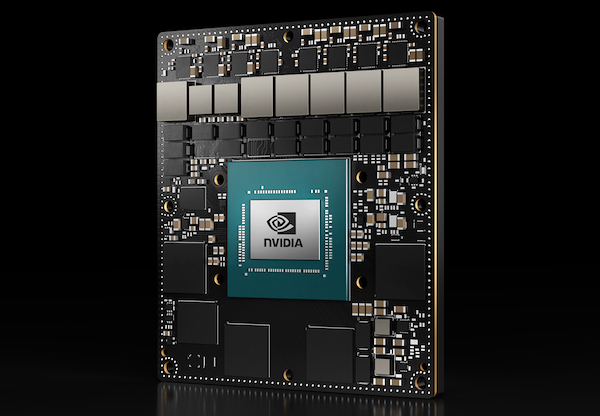

…to solve problems such as natural language understanding, 3D perception, and multisensor fusion. Jetson AGX Orin production modules. Source: NVIDIA NVIDIA to offer multiple modules The four Jetson Orin-based production modules, which NVIDIA announced at its GPU Technology Conference (GTC), are designed to offer customers a full range of server-class AI performance. The Jetson AGX Orin 32GB module is available to purchase now, while the 64GB version will be available in November. Two Orin NX production modules are coming later this year, said NVIDIA. The production systems are supported by the NVIDIA Jetson software stack, which has enabled thousands of…

Found in Robotics News & Content, with a score of 5.93

…said can add autonomy to any tractor using lidar perception algorithms. Horovitz will join Fieldin as chief autonomy officer, and Reshef will be the company’s chief technology officer. “It’s not enough to have great agricultural data or great autonomous technology—you need to have both to make autonomous farming a reality,” noted Horovitz. “What’s so powerful about this merger is the potent combination of Fieldin’s unparalleled farming data collection, which includes over 49 million hours’ worth of tractor driving, with our driverless technology expertise.” “We’re excited to join forces with Fieldin because only together will we be able to help farmers…

Found in Robotics News & Content, with a score of 5.84

…Sim, developers can generate production-quality datasets to train AI perception models,” wrote Gerard Andrews, senior product marketing manager for robotics at NVIDIA, in a blog post. “Developers will also be able to simulate robotic navigation and manipulation, as well as build a test environment to validate robotics applications continually.” NVIDIA works to close 'reality gap' With its NVIDIA Omniverse applications, the Santa Clara, Calif.-based company has focused on ever more realistic simulation and digital twins to accelerate artificial intelligence for robots. “This follows our strategy for end-to-end robotics,” Andrews told Robotics 24/7. “We segment the intersection of robotics and AI…

Found in Robotics News & Content, with a score of 5.82

…most complex AI models in natural language understanding, 3D perception, and multi-sensor fusion, it claimed. To demonstrate the performance improvement, NVIDIA ran some computer vision benchmarks using the NVIDIA JetPack 5.1. Testing included some dense INT8 and FP16 pretrained models from NGC. The same models were also run for comparison on Jetson Xavier NX. The company ran the following benchmarks: NVIDIA PeopleNet v2.5 for the highest accuracy in people detection; NVIDIA ActionRecognitionNet for 2D and 3D models; NVIDIA LPRNet for license plate recognition; NVIDIA DashCamNet for object detection and labeling; and NVIDIA BodyPoseNet for multiperson human pose estimation. “Taking the geomean…