Editors’ Picks

Found in Robotics News & Content, with a score of 0.56

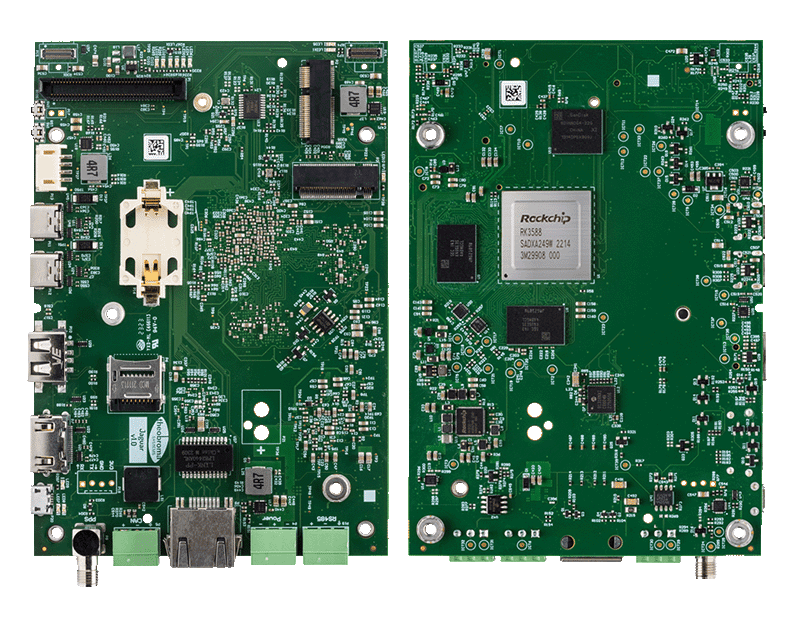

…and four ARM Cortex-A55 at 1.8 GHz, a Mali-G610 GPU, and an NPU with up to 6 TOPS (trillions of operations per second). JAGUAR opens up a wide range of possibilities for AI and machine learning applications, Theobroma Systems said.

The JAGUAR system is designed for robust AMRs. Source: Theobroma Systems

System has multiple interfaces, single power supply

Two SuperSpeed USB 3.1 interfaces allow high-resolution cameras, stereo cameras, or sensors such as 2D and 3D lidar and ToF sensors to be connected. Four MIPI-CSI interfaces allow the cost-efficient connection of high-resolution cameras, said…

Found in Robotics News & Content, with a score of 1.74

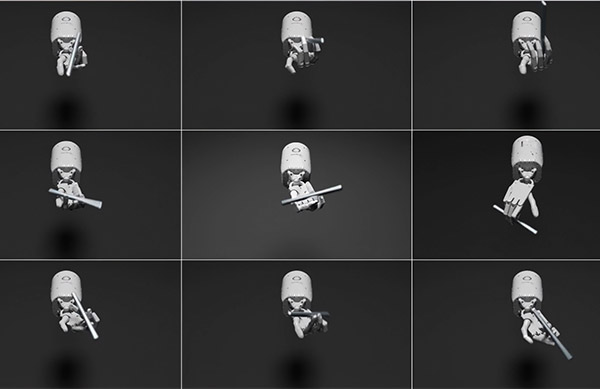

…a coding LLM. Then, it iterates between reward sampling, GPU-accelerated reward evaluation, and reward reflection to progressively improve its reward outputs.

Source: NVIDIA Research

Eureka AI trains robots

Eureka-generated reward programs, which enable trial-and-error learning for robots, can outperform expert human-written ones on more than 80% of tasks, according to the paper. This leads to an average performance improvement of more than 50% for the bots, said NVIDIA. The AI agent taps the GPT-4 LLM and generative AI to write software code that rewards robots for reinforcement learning. It doesn’t require task-specific prompting…
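The loop described in the excerpt above (sample candidate rewards, evaluate them, feed the results back into the next round) can be illustrated with a toy sketch. This is not Eureka's implementation: the excerpt says an LLM writes the reward code and a GPU-accelerated simulator scores it, whereas here random perturbation stands in for the LLM and a made-up quadratic score stands in for the simulator. All names (`evaluate`, `sample_candidates`, `search`) and the `target` weights are hypothetical.

```python
import random

def evaluate(weights, target=(1.0, -0.5)):
    # Hypothetical stand-in for reward evaluation: higher is better,
    # with the maximum (0.0) reached when weights equal target.
    return -sum((w - t) ** 2 for w, t in zip(weights, target))

def sample_candidates(best, spread, n, rng):
    # Stand-in for "reward sampling": perturb the current best weights.
    return [tuple(w + rng.gauss(0.0, spread) for w in best) for _ in range(n)]

def search(iterations=20, n=16, seed=0):
    rng = random.Random(seed)
    best = (0.0, 0.0)
    best_score = evaluate(best)
    spread = 1.0
    for _ in range(iterations):
        candidates = sample_candidates(best, spread, n, rng)
        top_score, top = max((evaluate(c), c) for c in candidates)
        if top_score > best_score:
            # Feedback step: keep the best candidate seen so far.
            best, best_score = top, top_score
        else:
            # No improvement this round, so search closer to the current best.
            spread *= 0.5
    return best, best_score

if __name__ == "__main__":
    weights, score = search()
    print(weights, score)
```

The only point of the sketch is the shape of the iteration: each round's scores decide what the next round samples from.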

Found in Robotics News & Content, with a score of 0.24

…it said. NVIDIA stated that its graphics processing unit (GPU)-accelerated GEMs enable visual odometry, depth perception, 3D scene reconstruction, and localization and planning. Robotics developers can use the tools to swiftly engineer systems for a range of applications, it said. “With an enhanced SDG [synthetic data generation], improved ROS support, and new sensor models for generative AI, Isaac accelerates AI for the ROS community,” said Talla. The latest Isaac ROS 2.0 release brings the platform to production-ready status, enabling developers to create and bring high-performance robots to market, explained the company. “ROS continues to grow and evolve to provide open-source…

Found in Robotics News & Content, with a score of 1.35

…Lovelace architecture enhancements in NVIDIA RTX graphics processing units (GPUs) with DLSS 3 technology fully integrated into the Omniverse RTX Renderer. A new AI de-noiser enables real-time 4K path tracing of massive industrial scenes. Native RTX-powered spatial integration — New extended-reality (XR) developer tools let users build spatial-computing options natively into their Omniverse-based applications. This gives users the flexibility to experience their 3D projects and virtual worlds however they like, NVIDIA said. These platform updates are showcased in Omniverse foundation applications, which are fully customizable reference applications that creators, enterprises, and developers can copy, extend, or enhance. Upgraded applications include:…

Found in Robotics News & Content, with a score of 0.70

…users and OEMs more easily integrate graphics processing unit (GPU) and lidar technology into self-driving vehicles. NVIDIA said DriveWorks is the foundation for AV software development and a trusted platform for creating and deploying applications. “Developers building on DriveWorks will be able to effectively integrate Hesai's lidar sensors into their vehicles, leading to more efficient and reliable autonomous driving systems.” “Simulation is a critical component to the sensor integration pipeline,” noted Hesai and NVIDIA. Built on Omniverse, NVIDIA DRIVE Sim provides a physically based virtual environment for validation and testing. With the ability to access Hesai lidar sensor models in…

Found in Robotics News & Content, with a score of 1.36

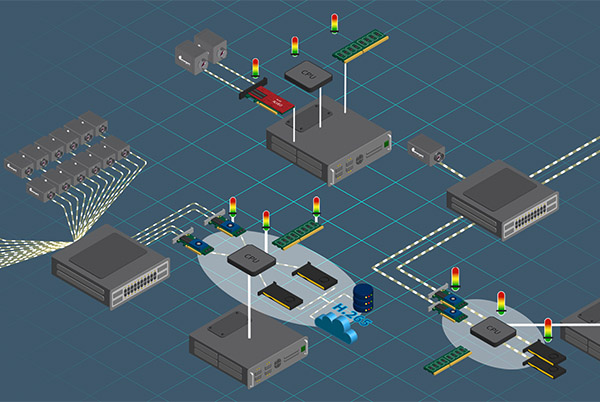

…delved into processing technologies, including NVIDIA graphics processing units (GPUs), field-programmable gate arrays (FPGAs), and Emergent’s award-winning software and proprietary network interface controllers (NICs). It also discussed how they unlock new capabilities for high-speed imaging, such as zero-copy image transfer and GPUDirect. In addition, the company covered the adoption of 10GigE and beyond and presented new high-speed imaging demonstrations featuring 24- and 48-camera setups. They used eCapture Pro software and GPUDirect. “In high-speed imaging applications where multiple 10GigE, 25GigE, or 100GigE streams are used, real-time processing will require offload technologies to more suitable processing technologies than just system…

Found in Robotics News & Content, with a score of 0.43

…MIC-75GF10 expansion module is compatible with the SKY-MXM-A2000 MXM GPU card, said Advantech. This provides machine vision and autonomous navigation capabilities for various applications, including wafer box handling, material handling, robotic arm operations, and more. MIC-770 V3 also supports WISE-Edge365, which enables remote management for status monitoring and anomaly detection. With these features, the MIC-770 V3 can be deployed for various edge artificial intelligence of things (AIoT) applications as a data gateway or industrial controller, said Advantech.

MOV.AI software is based on ROS

MOV.AI provides software and a Web-based interface for building, deploying, and running intelligent robots. The MOV.AI Robotics…

Found in Robotics News & Content, with a score of 0.39

…power efficiency lower than a typical computing platform running GPUs by implementing NVIDIA Jetson is important for enabling us to do these kinds of missions,” said Towal.

Oceanic surveying meets edge AI

Saildrone relies on the NVIDIA JetPack SDK for access to a full development environment for hardware-accelerated edge AI on the Jetson platform. It runs machine learning on the module for image-based vessel detection to aid navigation, said NVIDIA. Saildrone pilots set waypoints and optimize the routes using “metocean” data, which includes meteorological and oceanographic information, returned from the vehicle. All of the USVs are monitored around the clock, and operators…

Found in Robotics News & Content, with a score of 0.69

…for AI

Advantech works with NVIDIA graphics processing units (GPUs) in AGX Orin for integration with edge AI, explained Waldman. “We have five products based on NVIDIA Jetson for things like visual inspection and sensor fusion,” he said. “For instance, Advantech has partnered with Overview.ai, which has designed the plug-and-play OV20i AI vision system,” Waldman added. “We can show the ICAM-520 edge AI camera 20 parts, and it can identify what to inspect.” In addition, Advantech works with the second-generation Intel Xeon scalable processors. “We've also worked with AWS and Windows IoT on security,” said Waldman.

Boards with built-in IoT…

Found in Robotics News & Content, with a score of 0.89

…trillion global data center infrastructure. From graphics processing units (GPUs) accelerating computers past CPU capabilities to large language models and ChatGPT, Huang said technology and industry are at a positive “tipping point.”

Omniverse hosts simulations in real time

“With generative AI, you could tell a robot what you want, and it could generate animations,” Huang claimed. He showed videos that took into account real-world physics and lighting and ran in simulation without any pre-programming. NVIDIA Omniverse connects computer-aided design (CAD) software, APIs, and frameworks for generative AI running on Microsoft's Azure cloud using real-time factory data and 5G networking, enabling…

Found in Robotics News & Content, with a score of 5.60

Before a robot can navigate, it needs to know where it is. The NVIDIA Isaac™ ROS Map Localization package provides a GPU-accelerated node to find the position of the robot relative to a lidar occupancy map. In this webinar, we’ll present how this package works, and how to use it for initial localization of your robot.

Found in Robotics News & Content, with a score of 0.75

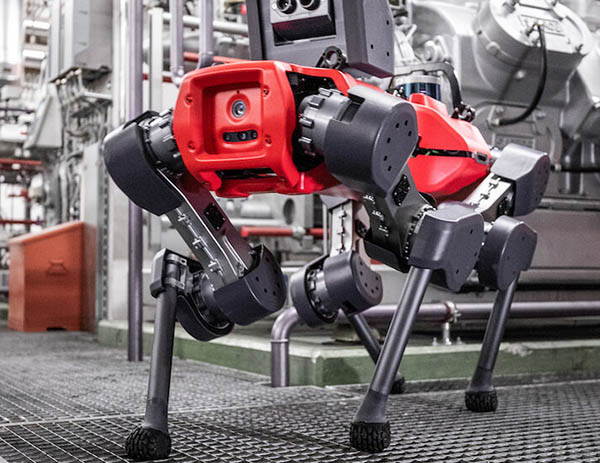

…NVIDIA, since it is taking advantage of the chip maker's GPU to power the ANYmal.

Investors see promise in ANYbotics

The funding round was led by investors Walden Catalyst and NGP Capital with participation from Bessemer Venture Partners, Aramco Ventures, Swisscom Ventures, Swisscanto Private Equity, and other existing investors. “We are thrilled to invest in ANYbotics, a pioneering technology originated at ETH Zurich that combines AI and Reinforcement Learning with robotics to create highly robust and autonomous four-legged robots,” said Young Sohn, managing partner at Walden Catalyst. “This unique technology allows robots to be easily deployable in complex industrial environments, making…