About six or seven years ago, when Martijn Tideman first joined TASS International, convincing car makers to use simulation software was an uphill battle. “They’d tell me, ‘It would never work. We’ve been doing road testing for 30 years. That’s what we’ll continue to focus on,’” he recalls.

His current PowerPoint deck for sales calls still includes a series of slides that make the case for using simulation software. But these days, he seldom gets to use them. “Now, when I talk to prospective clients, they say, ‘Skip those slides. We know we need to do it. Just show us what you can do.’”

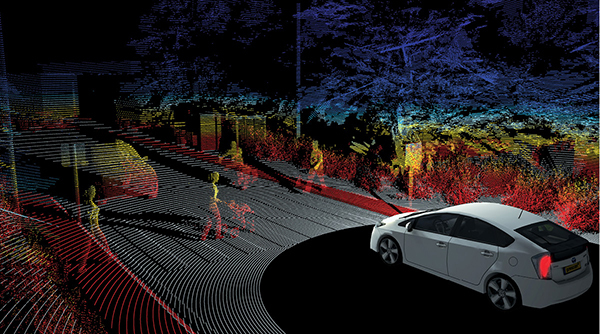

Among TASS International’s products is Simcenter Prescan, described as “a physics-based simulation platform for development of advanced driver assistance systems and automated driving systems that are based on sensor technologies such as radar, laser/lidar, camera, ultrasonic, DSRC [dedicated short-range communications] and GPS.”

Software such as Simcenter Prescan allows automakers to train their artificial intelligence programs to make the right decisions not only in routine events but also in rare yet inevitable ones. For example, how would the autonomous pilot react to an elephant crossing the road, or a group of trick-or-treaters dressed up as pumpkins?


VTD from MSC Software (part of Hexagon) is virtual test-driving software that autonomous vehicle developers can use to train AI decision making. Image courtesy of Hexagon.

Most human drivers have acquired sufficient life experiences to deal with these incidents, but autonomous vehicles have not. To physically set up these unusual scenarios to train the AI pilot is quite daunting. In some cases, it may be downright dangerous for the participants. This is where virtual driving software offers engineers the option to build the event in pixels and repeat it until the AI has developed a good strategy to deal with it, be it a sauntering elephant or a bunch of two-legged pumpkins.

When an elephant crosses the road

TASS International traces its origins to the Netherlands Organisation for Applied Scientific Research (TNO). The company was created in 2013 through the consolidation of five TNO divisions. In September 2017, manufacturing titan Siemens acquired TASS International in a bid to bolster its offerings to autonomous car developers. TASS International products and services are now part of Siemens’ Simcenter portfolio.

TASS International’s products are: Simcenter Madymo, for restraint system design and occupant safety analysis; Simcenter Prescan, for virtual development and validation of automated driving systems; and Simcenter Tyre, for tire simulation and vehicle performance analysis.

GPU maker NVIDIA’s DRIVE Constellation is a two-server setup that lets you create a virtual driving experience. Image courtesy of NVIDIA.

Although most autonomous vehicle developers are concentrating on simulating and testing how the AI program handles routine events (lane changes, road construction signs, sudden stops and so on), Tideman feels it’s equally important to anticipate and test rare but inevitable events.

“Unpredictable human behavior is difficult to model and adapt autonomous vehicles to anticipate human drivers!”

— DE 2018 survey response

“Statistically, some events only occur once in every 10,000 miles, and others once in every 100,000 miles,” Tideman says. “But that means if the car is on the road long enough, it will encounter them.”
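Tideman’s point can be illustrated with a back-of-the-envelope sketch. It assumes, as a simplification that does not come from the article, that a rare event arrives independently at a constant average rate per mile (a Poisson model). The function name and the mileages in the usage loop are illustrative only.

```python
import math


def prob_at_least_one(miles_driven: float, miles_per_event: float) -> float:
    """Chance of meeting the event at least once over miles_driven.

    Assumes a Poisson process with one event per miles_per_event miles
    on average (an assumption made for this sketch).
    """
    if miles_driven < 0 or miles_per_event <= 0:
        raise ValueError("miles_driven must be >= 0 and miles_per_event > 0")
    expected_events = miles_driven / miles_per_event
    return 1.0 - math.exp(-expected_events)


if __name__ == "__main__":
    # The two event frequencies quoted above, over increasing mileage.
    for miles_per_event in (10_000, 100_000):
        for miles_driven in (10_000, 100_000, 1_000_000):
            p = prob_at_least_one(miles_driven, miles_per_event)
            print(f"1 per {miles_per_event:>7,} mi, "
                  f"{miles_driven:>9,} mi driven: {p:.1%}")
```

Under this assumption, the probability climbs toward certainty as mileage accumulates, which is the intuition behind “if the car is on the road long enough.”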

On a normal day in New York, having to stop for an elephant crossing the road is highly improbable, but if a circus is in town, the likelihood increases. It increases more if the location changes to rural parts of Southeast Asia, where people and beasts share the land and the roads.

Then there are also combinations of factors that are unlikely to occur, but still within the realm of possibility. Most engineers would test and train the AI to drive in a blizzard, deal with faded lane markers, or react to the sudden appearance of a pedestrian. But are they testing how the AI performs when it’s driving through a blizzard on a road with faded lane markers, and a pedestrian suddenly dashes across the street?

Training the AI requires repeating variations of the same event over and over—for example, the same scenario above playing out in different degrees of visibility. “Without simulation, you can’t address even a fraction of these cases,” points out S. Ravi Shankar, global director of simulation, Siemens PLM Software.
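A minimal sketch shows how quickly such combinations multiply. It is not based on Simcenter Prescan or any other vendor’s tool: the `Scenario` fields, the condition lists and the visibility values are hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Scenario:
    """One test case; all field names are hypothetical."""
    weather: str
    lane_markings: str
    pedestrian: str
    visibility_m: int  # how far ahead can be seen, in meters


# Illustrative condition lists, not taken from any real test plan.
WEATHER = ("clear", "rain", "blizzard")
LANE_MARKINGS = ("fresh", "faded")
PEDESTRIAN = ("none", "sudden_crossing")
VISIBILITY_M = (25, 50, 100, 200)


def build_scenarios() -> list[Scenario]:
    """Enumerate every combination of the conditions above."""
    return [
        Scenario(weather, markings, pedestrian, visibility)
        for weather, markings, pedestrian, visibility in product(
            WEATHER, LANE_MARKINGS, PEDESTRIAN, VISIBILITY_M
        )
    ]


if __name__ == "__main__":
    scenarios = build_scenarios()
    print(f"{len(scenarios)} scenarios in total")
    # The compound case from the text, at each visibility level.
    for s in scenarios:
        if (s.weather, s.lane_markings, s.pedestrian) == (
            "blizzard", "faded", "sudden_crossing"
        ):
            print(s)
```

Even these four short lists produce dozens of distinct cases, and each added factor multiplies the total, which is why staging them all physically is impractical.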

When the pumpkins take a stroll

Some engineers think regulatory measures hamper innovation and creativity, but Luca Castignani, autonomous driving strategist at MSC Software, thinks self-driving vehicle developers should welcome them. “Regulations should set an open standard for the industry,” he reasons. “Without an open standard to define the roads, sensors and the scenarios, it’s extremely difficult for autonomous driving companies to work with suppliers or the government to develop or validate the autonomous system.”

Castignani has given several talks on simulation-based autonomous car development. “The reality is, we’re entering uncharted territories, a new continent we didn’t plan to uncover,” he says.

Known for its simulation software, MSC is now part of Hexagon, a Sweden-headquartered company with offerings for the manufacturing, automotive and infrastructure industries, among others. The acquisitions of MSC Software and VIRES VTD in 2017, and of AutonomouStuff this year, complement Hexagon’s domain expertise in metrology sensors, GPS software, smart city and positioning intelligence software. The company believes its strategy and product line offer an edge in the autonomous driving simulation and testing fields.

In the DE 2018 survey, more respondents chose simulation (46%) than any other technology as the one that will have the biggest impact on product design and development over the next five years.

VTD (Virtual Test Drive), originally developed by VIRES (pronounced vee-res), is described as “an open platform for the creation, configuration, presentation and evaluation of virtual environments for autonomous driving validation.” It complements other offerings such as MSC Adams, a multibody simulation package that engineers can use to model and simulate the mechanical behaviors of cars.

In a program like VTD, you can set up the environment, the agents and the circumstances. This gives you the chance to test out scenarios that are difficult to reproduce. “Suppose you want to know how the car will behave when the city decides to paint all the road signs in yellow instead of white? Or what happens when the trees planted today grow to a size that prevents the driver from seeing the pedestrians?” Castignani asks.

With simulation, it’s also possible to create outlier scenarios for testing. “Think of workers carrying a large mirror and crossing the street. Think of children dressed up as pumpkins, out for a walk on Halloween,” Castignani says. “I don’t think many of these scenarios have been taken into consideration, but those are realities.”

It’s not impossible to test these scenarios in real life, but to create these experiments and repeat them many times is costly, time-consuming and, in some cases, risky for the actors. These are, Castignani points out, better suited for virtual roads with virtual pedestrians.

Each car runs on its own constellation

Autonomous vehicle development has become a regular topic at graphics processing unit (GPU) maker NVIDIA’s annual GPU Technology Conference. In 2018, NVIDIA CEO Jen-Hsun Huang highlighted the latest addition to its lineup of autonomous car-related offerings: DRIVE Constellation.

DRIVE Constellation is a data center solution that combines hardware and software packed into two servers. The first contains an array of GPUs, and runs DRIVE Sim software, which recreates data streams from a virtual car’s sensors: cameras, radars and lidars. Those data streams are then fed into the second server that contains a DRIVE AGX Pegasus, the AI car computer powering many of the industry’s self-driving cars. Think of each DRIVE Constellation setup as the equivalent of one virtual vehicle on the road.

“You can import worlds, roads, vehicle models, and scenarios into DRIVE Constellation, to test and validate AV hardware and software under a wide range of conditions,” Danny Shapiro, senior director for automotive, NVIDIA, says. “The simulation is happening in real time; the GPUs are generating the sensor outputs and the DRIVE AGX Pegasus is processing data and giving actuation commands as if the car were really on the road. Constellation enables true hardware-in-the-loop testing before putting vehicles on the road.”
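The closed loop Shapiro describes can be sketched in a few lines. This is a toy model under stated assumptions, not NVIDIA’s software or API: `SensorSimulator` stands in for the first server, `DrivingComputer` for the second, and the one-dimensional physics, braking rule and default values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SensorFrame:
    distance_to_obstacle_m: float
    speed_mps: float


@dataclass
class Actuation:
    brake: bool


class SensorSimulator:
    """Stand-in for the first server: owns the virtual world, emits sensor data."""

    def __init__(self, obstacle_at_m: float, speed_mps: float,
                 brake_decel_mps2: float = 6.0):
        self.position_m = 0.0
        self.obstacle_at_m = obstacle_at_m
        self.speed_mps = speed_mps
        self.brake_decel_mps2 = brake_decel_mps2

    def sense(self) -> SensorFrame:
        return SensorFrame(self.obstacle_at_m - self.position_m, self.speed_mps)

    def step(self, command: Actuation, dt_s: float) -> None:
        # Apply the command, then advance the virtual car by one time step.
        if command.brake:
            self.speed_mps = max(0.0, self.speed_mps - self.brake_decel_mps2 * dt_s)
        self.position_m += self.speed_mps * dt_s


class DrivingComputer:
    """Stand-in for the second server: turns sensor data into commands."""

    def __init__(self, assumed_decel_mps2: float = 6.0, margin_m: float = 5.0):
        self.assumed_decel_mps2 = assumed_decel_mps2
        self.margin_m = margin_m

    def decide(self, frame: SensorFrame) -> Actuation:
        # Brake once the obstacle is within stopping distance plus a margin.
        stopping_m = frame.speed_mps ** 2 / (2 * self.assumed_decel_mps2)
        return Actuation(
            brake=frame.distance_to_obstacle_m <= stopping_m + self.margin_m
        )


def run_closed_loop(sim: SensorSimulator, computer: DrivingComputer,
                    dt_s: float = 0.01, max_steps: int = 10_000) -> float:
    """Loop sense -> decide -> step until the car stops.

    Returns the remaining gap to the obstacle in meters (negative = collision).
    """
    for _ in range(max_steps):
        command = computer.decide(sim.sense())
        sim.step(command, dt_s)
        if sim.speed_mps == 0.0:
            break
    return sim.obstacle_at_m - sim.position_m


if __name__ == "__main__":
    gap = run_closed_loop(SensorSimulator(obstacle_at_m=100.0, speed_mps=20.0),
                          DrivingComputer())
    print(f"Stopped with {gap:.2f} m to spare")
```

The design point is the separation of roles: the simulator never decides anything and the driving computer never sees the world directly, only the sensor frames, so the same decision logic could in principle be exercised against any number of virtual scenarios.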

A benefit of this type of setup is the ability to repeat the target scenario with various parameters—to conduct reruns—and cover the full scope of the problems the AI will likely encounter. “Suppose you’re concerned with how a car will deal with tough conditions at sunrise or sunset, when real drivers have said they tend to be blinded by the light. If you were to do the test in real life, you will only be able to do this twice a day during that precise moment,” Shapiro says. “But with simulation, you can spend all day testing blinding conditions, with all different types of traffic scenarios and all types of weather conditions. The flexibility and scalability of DRIVE Constellation enables developers to create safer self-driving systems.”

World building

Acquiring digital twins of real-world cities is an ongoing task, currently done by road scanning firms like Atlatec, data giants like Google and autonomous car developers themselves. Hexagon’s product line includes the Leica Pegasus mapping platform. When connected to VTD, the platform lets engineers speed up road digitization. In one project, geospatial consultant Transcend Spatial Solutions used the Pegasus system to map and digitize the span of San Francisco’s Golden Gate Bridge (“Demystifying Mobile Mapping,” xyHt, June 2016, xyht.com).

TASS International’s parent company Siemens has a partnership with Bentley Systems, known for architecture and infrastructure software. “We can import data from Bentley Systems and automatically generate a full 3D city map within Simcenter Prescan as a basis for virtually testing an automated vehicle,” Tideman says.

The ability to import existing 3D models of cities into driving simulators could speed up the environment creation process, but the lack of these tools is not necessarily a hindrance. “It’s not essential to model New York City in great detail to enable your autonomous car to be able to drive in it. You just have to teach your car to deal with a wide range of scenarios that could occur in New York,” says Tideman.

Similarly, Shapiro adds, “You don’t necessarily have to map every single road as they exist. You can use simulation to train the car to recognize stop signs, traffic lights, lane markings and so on. That way, your car can handle a road that it may have never seen before.”

Take it slow

The growing number of tech giants and leading automakers jumping into the autonomous vehicle market gives the impression that latecomers could miss out. Consequently, many may feel pressured to rush a product out before sufficient testing has been done. However, statistics on consumer attitudes suggest adoption will not happen overnight (see “Americans’ attitudes toward driverless vehicles” by the Pew Research Center), so there is still plenty of time to devote to R&D for safety testing.
