NVIDIA Corp. is known for supporting simulation and artificial intelligence, and it has been touting its advances in robotics and machine learning. At the Conference on Robot Learning, or CoRL, next month, the NVIDIA Robotics Research Lab will present its latest findings. The company also described how scientists and engineers are collaborating to use NVIDIA Jetson in undersea research platforms.

The Seattle-based NVIDIA Robotics Research Lab focuses on robot manipulation, perception, and physics-based simulation. It is part of NVIDIA Research, which includes more than 300 people around the world researching topics such as AI, computer graphics, computer vision, and autonomous vehicles.

The company said its team will present 15 papers at CoRL, which runs from Nov. 6 to 9 in Atlanta. Many of them describe simulation-to-real findings that enable imitation learning, perception, segmentation, and tracking in robotics.

NVIDIA to share advances in robot learning

For contact-rich manipulation tasks using imitation learning, NVIDIA said its researchers have developed novel methods to scale data generation in simulation. They have also developed a human-in-the-loop imitation-learning method to accelerate data collection and improve accuracy, it noted.

The company also used large language models for tasks as diverse as traffic simulation and language-guided task description to predict grasping poses. It listed the following papers to be featured at CoRL:

- “Shelving, Stacking, Hanging: Relational Pose Diffusion for Multi-Modal Rearrangement:” This paper covers a system for repositioning objects in scenes trained on 3D point-cloud data. “The approach excels in various rearrangement tasks, demonstrating its adaptability and precision in both simulation and the real world,” said NVIDIA.

- “Imitating Task and Motion Planning With Visuomotor Transformers:” This paper covers a novel system that NVIDIA said uses large-scale datasets generated by task and motion planning (TAMP) supervisors and flexible transformer models to excel in diverse robot manipulation tasks.

- “Human-in-the-Loop Task and Motion Planning for Imitation Learning:” This paper covers a TAMP-gated control mechanism that makes data collection more efficient, producing better results than conventional teleoperation systems, NVIDIA asserted.

- “MimicGen: A Data Generation System for Scalable Robot Learning Using Human Demonstrations:” MimicGen automatically generates large-scale robot training datasets by adapting a small number of human demonstrations to various contexts. Using around 200 human demonstrations, it created over 50,000 diverse task scenarios, enabling effective training of robot agents for high-precision and long-horizon tasks.

- “M2T2: Multi-Task Masked Transformer for Object-Centric Pick-and-Place:” This paper covers how a transformer model can pick up and place arbitrary objects in cluttered scenes with zero-shot sim2real transfer, outperforming task-specific systems by up to 37.5% in challenging scenarios and filling the gap between high- and low-level robotic tasks.

- “STOW: Discrete-Frame Segmentation and Tracking of Unseen Objects for Warehouse Picking Robots:” In dynamic industrial robotic contexts, segmenting and tracking unseen object instances with substantial temporal frame gaps can be challenging, said NVIDIA. The method featured in this paper introduces synthetic and real-world datasets and a novel approach for joint segmentation and tracking using a transformer module.

- “Composable Part-Based Manipulation:” This paper covers composable part-based manipulation (CPM), which uses object-part decomposition and part-part correspondences to enhance robotic manipulation skill learning and generalization. It has demonstrated its effectiveness in simulated and real-world scenarios, NVIDIA explained.

NVIDIA added that its researchers have both the second and third most-cited papers from CoRL 2021 and the most-cited paper from CoRL 2022.

One of the papers selected for an oral presentation at this year’s conference is a follow-up to those prior findings: “RVT: Robotic View Transformer for 3D Object Manipulation.”

NVIDIA Robotics Research Lab also noted that its members this year wrote a paper on “Language-Guided Traffic Simulation via Scene-Level Diffusion.”

NVIDIA Jetson Orin supports Woods Hole, MIT robot development

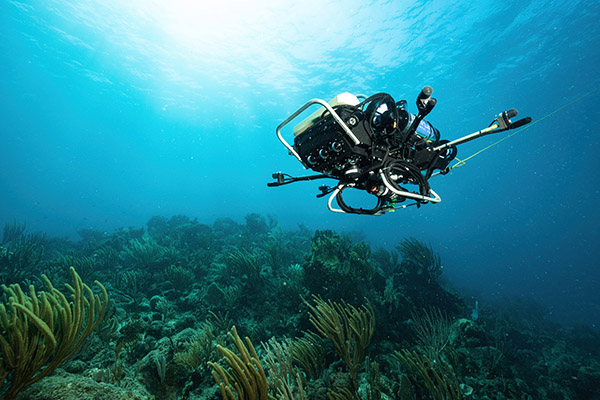

“Researchers are taking deep learning for a deep dive, literally,” said Scott Martin, senior writer at NVIDIA, in a blog post. The Woods Hole Oceanographic Institution (WHOI) Autonomous Robotics and Perception Laboratory (WARPLab) and the Massachusetts Institute of Technology are developing a robot to study coral reefs and their ecosystems.

Some 25% of coral reefs worldwide have vanished in the past three decades, and most of the remaining reefs are heading for extinction, according to the WHOI Reef Solutions Initiative.

The new WARPLab autonomous underwater vehicle (AUV) is part of an effort to turn the tide on reef declines, said WHOI, which claimed to be the world's largest private ocean research institution. The AUV uses an NVIDIA Jetson Orin NX module.

CUREE (Curious Underwater Robot for Ecosystem Exploration) gathers visual, audio, and other environmental data alongside divers to help understand the human impact on sea life. The robot runs an expanding collection of NVIDIA Jetson-enabled edge AI to build 3D models of reefs and to track creatures and plant life. It also runs models to navigate and collect data autonomously.

WHOI said it is developing CUREE to gather data, scale the effort, and aid in mitigation strategies. The organization is also exploring the use of simulation and digital twins to better replicate reef conditions, and it is investigating systems such as NVIDIA Omniverse, a platform for building and connecting 3D tools and applications.

NVIDIA is also developing Earth-2, which it calls the world’s most powerful AI supercomputer for predicting climate change, to create a digital twin of Earth in Omniverse.

DeepSeeColor model helps underwater perception

“Seeing underwater isn’t as clear as seeing on land,” noted Martin. “Over distance, water attenuates the visible spectrum of light from the sun underwater, muting some colors more than others. At the same time, particles in the water create a hazy view, known as backscatter.”

A team from WARPLab recently published a research paper on undersea vision correction that helps mitigate these problems. It describes the DeepSeeColor model, which uses two convolutional neural networks to reduce backscatter and correct colors in real time on the NVIDIA Jetson Orin NX while undersea.

“NVIDIA GPUs are involved in a large portion of our pipeline because, basically, when the images come in, we use DeepSeeColor to color correct them, and then we can do the fish detection and transmit that to a scientist up at the surface on a boat,” said Stewart Jamieson, a robotics Ph.D. candidate at MIT and an AI developer at WARPLab.
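The two effects Martin describes, color attenuation over distance and backscatter haze, can be made concrete with a small sketch. It uses a commonly cited underwater image formation model in which each color channel fades exponentially with range and a range-dependent haze term is added. This is not the DeepSeeColor network itself; the coefficient values and function names below are illustrative assumptions, and the code only inverts the model for a single pixel when the range and coefficients are already known.

```python
import math

# Hypothetical per-channel coefficients in (R, G, B) order.
# Real values depend on the water and would have to be estimated.
ATTENUATION = (0.40, 0.10, 0.05)       # red fades fastest with distance
BACKSCATTER_RATE = (0.30, 0.15, 0.10)  # how quickly haze builds up with range
VEILING_LIGHT = (0.05, 0.35, 0.45)     # haze color at very long range


def simulate_underwater(true_rgb, range_m):
    """Apply attenuation and backscatter to one pixel with values in [0, 1]."""
    observed = []
    for j, beta, gamma, b_inf in zip(
        true_rgb, ATTENUATION, BACKSCATTER_RATE, VEILING_LIGHT
    ):
        direct = j * math.exp(-beta * range_m)
        backscatter = b_inf * (1.0 - math.exp(-gamma * range_m))
        observed.append(direct + backscatter)
    return tuple(observed)


def restore_pixel(observed_rgb, range_m):
    """Invert the model: subtract backscatter, then undo attenuation."""
    restored = []
    for i, beta, gamma, b_inf in zip(
        observed_rgb, ATTENUATION, BACKSCATTER_RATE, VEILING_LIGHT
    ):
        backscatter = b_inf * (1.0 - math.exp(-gamma * range_m))
        direct = i - backscatter
        j = direct * math.exp(beta * range_m)
        restored.append(min(1.0, max(0.0, j)))  # clamp to the valid range
    return tuple(restored)


true_color = (0.8, 0.5, 0.3)
seen_at_3m = simulate_underwater(true_color, 3.0)
recovered = restore_pixel(seen_at_3m, 3.0)
```

In this toy setting the red channel of `seen_at_3m` is far weaker than in `true_color`, and `restore_pixel` recovers the original values because it is given the exact coefficients. A learned model is needed in practice precisely because those coefficients are not known in advance.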

CUREE cameras detect fish and reefs

CUREE includes four forward-facing cameras, four hydrophones for underwater audio capture, depth sensors, and inertial measurement unit sensors. GPS doesn’t work underwater, so it is only used to initialize the robot’s starting position while on the surface.

These sensors and the AI models running on Jetson Orin NX enable CUREE to collect data for 3D models of reefs and undersea terrains. To use the hydrophones for audio data collection, CUREE needs to drift with its motor off to avoid audio interference, said the researchers.

“It can build a spatial soundscape map of the reef, using sounds produced by different animals,” said Yogesh Girdhar, an associate scientist at WHOI, who leads WARPLab. “We currently [in post-processing] detect where all the chatter associated with bioactivity hotspots [is],” especially from snapping shrimp, he said.

Despite the scarcity of underwater datasets, the team’s early work on fish detection and tracking is going well, said Levi Cai, a Ph.D. candidate in the MIT-WHOI joint program. He said they’re taking a semi-supervised approach to the marine animal tracking problem.

The tracking starts with targets detected by a fish-detection neural network. The network is trained on open-source datasets and then fine-tuned, using transfer learning, on images gathered by CUREE.

“We manually drive the vehicle until we see an animal that we want to track, and then we click on it and have the semi-supervised tracker take over from there,” said Cai.
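The click-then-follow workflow Cai describes can be illustrated with a much simpler stand-in. The sketch below is not the team's semi-supervised tracker: it assumes a detector already returns bounding boxes as `(x1, y1, x2, y2)` tuples for each frame, picks the box under the operator's click, and then follows it by greedy intersection-over-union (IoU) matching. All function names and the `min_iou` threshold are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def pick_clicked_target(click_xy, detections):
    """Return the first detection box that contains the click, or None."""
    x, y = click_xy
    for box in detections:
        if box[0] <= x <= box[2] and box[1] <= y <= box[3]:
            return box
    return None


def follow_target(initial_box, frames, min_iou=0.3):
    """Follow one target through per-frame detections.

    Returns one entry per frame: the matched box, or None when no
    detection overlaps the last known box by at least min_iou.
    """
    track = []
    current = initial_box
    for detections in frames:
        best = max(detections, key=lambda box: iou(current, box), default=None)
        if best is not None and iou(current, best) >= min_iou:
            current = best
            track.append(best)
        else:
            track.append(None)  # keep the last known box for the next frame
    return track


first_frame = [(10, 10, 30, 30), (100, 100, 120, 120)]
target = pick_clicked_target((15, 20), first_frame)
later_frames = [
    [(12, 11, 32, 31), (100, 100, 120, 120)],
    [(15, 13, 35, 33)],
    [(200, 200, 220, 220)],
]
track = follow_target(target, later_frames)
```

Here the clicked fish is followed for two frames and then reported as lost in the third, where the only detection is far from its last known position.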

Jetson Orin saves energy for CUREE

Energy efficiency is critical for small AUVs like CUREE, said NVIDIA. The compute requirements for data collection consume about 25% of the available energy, with driving the robot taking the remainder.

CUREE typically operates for as long as two hours on a charge, depending on the mission, said Girdhar, who goes on the dive missions in St. John in the U.S. Virgin Islands.
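A rough back-of-the-envelope calculation shows why the compute share matters. The sketch below takes the roughly 25% compute share and the two-hour endurance cited above and applies them to a battery capacity that is purely a placeholder assumption, since the article does not give one. The function names are likewise illustrative.

```python
def energy_budget(battery_wh, mission_hours, compute_fraction=0.25):
    """Split a battery's energy between compute and propulsion."""
    if mission_hours <= 0:
        raise ValueError("mission_hours must be positive")
    compute_wh = battery_wh * compute_fraction
    propulsion_wh = battery_wh - compute_wh
    return {
        "compute_wh": compute_wh,
        "propulsion_wh": propulsion_wh,
        "avg_compute_w": compute_wh / mission_hours,
        "avg_propulsion_w": propulsion_wh / mission_hours,
    }


def endurance_hours(battery_wh, compute_w, propulsion_w):
    """Mission time if both loads draw constant average power."""
    return battery_wh / (compute_w + propulsion_w)


# Placeholder capacity: 200 Wh is an assumption, not a CUREE specification.
budget = energy_budget(battery_wh=200.0, mission_hours=2.0)

# What if duty-cycling the sensors halved the average compute draw?
longer = endurance_hours(
    200.0,
    compute_w=budget["avg_compute_w"] / 2,
    propulsion_w=budget["avg_propulsion_w"],
)
```

Under these assumed numbers, compute averages 25 W and propulsion 75 W, and halving the compute draw would stretch a two-hour mission to a little under 2.3 hours.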

To enhance energy efficiency, the team is looking into AI for managing the sensors so that computing resources automatically stay awake while making observations and sleep when not in use.

“Our robot is small, so the amount of energy spent on GPU computing actually matters — with Jetson Orin NX, our power issues are gone, and it has made our system much more robust,” Girdhar said.

Isaac Sim could enable improvements

The WARPLab team is experimenting with NVIDIA Isaac Sim, a robotics simulation and synthetic data generation tool powered by Omniverse, to accelerate development of autonomy and observation for CUREE.

Its goal is to run simple simulations in Isaac Sim to capture the core essence of the problem and then finish the training in the real world, said Girdhar.

“In a coral reef environment, we cannot depend on sonars — we need to get up really close,” he said. “Our goal is to observe different ecosystems and processes happening.”

WARPLab strives for understanding, mitigation

The WARPLab team said it intends to make the CUREE platform available for others to understand the impact humans are having on undersea environments and to help create mitigation strategies.

The researchers plan to learn from patterns that emerge from the data collected almost fully autonomously by CUREE. “A scientist gets way more out of this than if the task had to be done manually, driving it around staring at a screen all day,” Jamieson said.

Girdhar said that ecosystems like coral reefs can be modeled with a network, with different nodes corresponding to different species and habitat types. The researchers are seeking to understand this network to learn about the relationships among various animals and their habitats, he said.
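One simple way to picture such a network is as a graph whose nodes are species and habitat types and whose edge weights count how often two labels were observed together. The sketch below is only an illustration of that data structure. Co-occurrence counting, the function names, and the example labels are assumptions, not the researchers' model.

```python
from collections import defaultdict
from itertools import combinations


def build_cooccurrence_graph(observations):
    """Build an undirected weighted graph from sets of co-observed labels.

    Each observation is a collection of species or habitat labels seen
    together at one site. Edge weights count shared observations.
    """
    graph = defaultdict(lambda: defaultdict(int))
    for labels in observations:
        for a, b in combinations(sorted(set(labels)), 2):
            graph[a][b] += 1
            graph[b][a] += 1
    return graph


def strongest_neighbors(graph, node, top_n=3):
    """Return up to top_n (neighbor, weight) pairs, heaviest edges first."""
    neighbors = graph.get(node, {})
    return sorted(neighbors.items(), key=lambda kv: (-kv[1], kv[0]))[:top_n]


# Hypothetical observations; the labels are examples only.
observations = [
    {"snapping shrimp", "branching coral"},
    {"snapping shrimp", "branching coral", "parrotfish"},
    {"parrotfish", "sand flat"},
]
reef = build_cooccurrence_graph(observations)
closest = strongest_neighbors(reef, "snapping shrimp", top_n=1)
```

With these made-up observations, `closest` pairs snapping shrimp most strongly with branching coral, the kind of species-habitat relationship the network is meant to expose.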

The data collected by CUREE AUVs could help people understand how ecosystems are affected by harbors, pesticide runoff, carbon emissions, and dive tourism, said Girdhar.

“We can then better design and deploy interventions and determine, for example, if we planted new corals how they would change the reef over time,” he said.
