As robotics suppliers work to expand automation to new tasks and markets, their systems need to be easier to train and more flexible. Micropsi Industries has developed MIRAI, a control system that it said enables collaborative robots to perceive and react rather than follow preset programs.

Micropsi was founded in 2014 and has offices in Brooklyn, N.Y., and Berlin, Germany. The company is targeting assembly and test applications in electronics, consumer goods, and other manufacturing.

“I have a background in computer science and philosophy, and I got into robotics via artificial intelligence,” said Ronnie Vuine, founder and CEO of Micropsi Industries. “I'm not a mechatronics person. I came to AI for robotics by looking for applications where AI could learn from people what to do in the real world. I realized nobody in industrial robotics was doing it.”

Micropsi obtained funding in 2018, and its first commercial deployments are now going live, he told Robotics 24/7.

Robot learning in real time

How is MIRAI different from other demonstration-based training for cobots such as those from Universal Robots or Rethink Robotics? “Flexibility,” Vuine replied. “For example, in traditional robotics, if the task was to plug a cable into an iPhone, we would have gotten a 3D camera, built a point cloud model of the phone, and then given it a single movement directive.”

“But if it was off by a half a degree, you'd be in trouble. It was mostly about precision and measuring,” he said. “Humans are better at this, but they don't know exactly how they do it. Instead of measuring, we make small corrective movements, quickly and frequently. Instead of consciously establishing where everything is and then determining where to go, we can plug it in reliably.”

“Our product has 20 or more hertz of real-time control without measurements guiding the robot,” claimed Vuine. “Unlike the cobot makers, which teach points, and the robot interpolates between them, operators can train it.”
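The contrast Vuine draws, between measuring once and committing to a single move versus making small, frequent corrections, can be illustrated with a toy simulation. The sketch below is not Micropsi's implementation. It is a one-dimensional stand-in in which `observe_error` is a hypothetical placeholder for whatever a camera-driven policy would estimate on each frame, and the gain, step cap, noise level, and calibration bias are all assumed values. Only the 20 Hz rate comes from the article.

```python
import random

CONTROL_HZ = 20       # control rate quoted in the article
MAX_STEP_MM = 0.5     # assumed cap on each corrective move


def open_loop_move(measured_target_mm):
    """Measure once, then commit to a single move to the measured spot."""
    return measured_target_mm


def closed_loop_move(start_mm, observe_error, seconds=3.0, gain=0.3):
    """Observe the remaining offset on every tick and take a small step."""
    pos = start_mm
    for _ in range(int(seconds * CONTROL_HZ)):
        err = observe_error(pos)  # hypothetical per-frame estimate
        step = max(-MAX_STEP_MM, min(MAX_STEP_MM, gain * err))
        pos += step
    return pos


def simulate(seed=0):
    """Return (open-loop miss, closed-loop miss) in millimeters."""
    rng = random.Random(seed)
    true_target = 10.0
    calibration_bias = 0.8  # assumed one-off measurement error, in mm

    final_open = open_loop_move(true_target + calibration_bias)

    def observe_error(pos):
        # Noisy but unbiased view of the remaining offset (assumed model).
        return (true_target - pos) + rng.gauss(0.0, 0.05)

    final_closed = closed_loop_move(0.0, observe_error)
    return abs(final_open - true_target), abs(final_closed - true_target)


if __name__ == "__main__":
    open_err, closed_err = simulate()
    print(f"open-loop miss:   {open_err:.3f} mm")
    print(f"closed-loop miss: {closed_err:.3f} mm")
```

In this toy setup, the single measured move inherits the full calibration error, while the corrective loop ends up limited only by the per-frame noise. A real system would have to deal with camera latency, multiple axes, and contact forces, none of which are modeled here.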

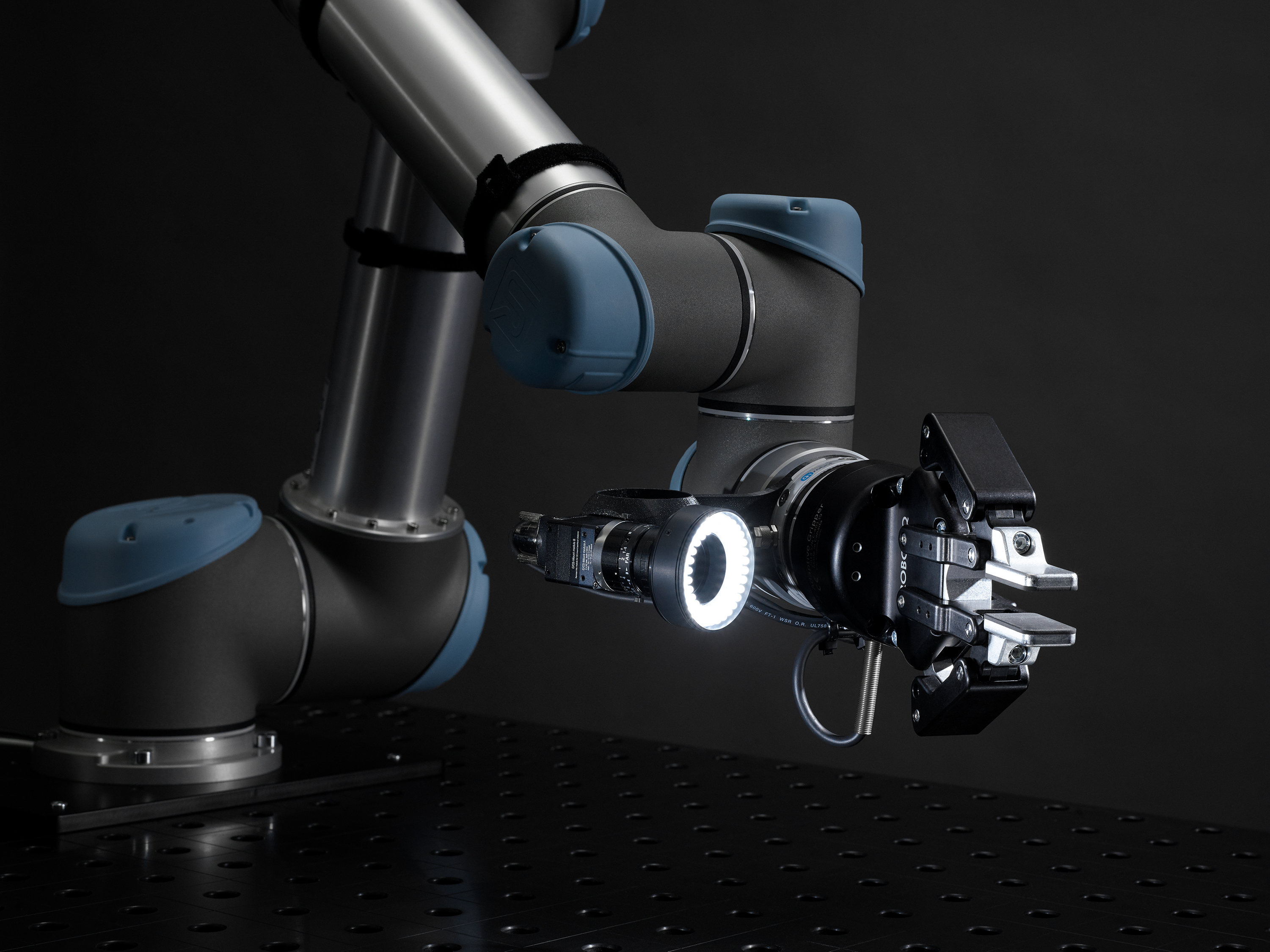

“MIRAI sees with cameras,” he explained. “There were many problems to solve, such as in machine tending, where a cobot doesn't know exactly where things are. Then there were the machine vision challenges, such as with lighting. Figuring out where to put a camera takes a bit of engineering before you start training.”

“Any time you're dealing with variance in machine position, color, or place, the user can train MIRAI to understand what images mean and how to behave,” Vuine said. “Just connect a camera to the robot's wrist and guide the arm to points. It watches and generalizes what it has been shown and knows where to go. Our system allows the robot to have intuition, and it can solve previously unsolvable problems.”

Use cases for MIRAI

Vuine cited an example of “fridge sniffing,” when refrigerator manufacturers inspect compressors and copper tubes for coolant leaks. “The soldering looks very different, depending on who does it and when,” he said. “To make sure the tubes are airtight, you bring a probe up to 1 mm distance. How to do that with a robot when the joints may be in different places each time?”

“We solved it using MIRAI by guiding the robot to where the solder joint was,” Vuine said. “It was doing it as well as humans, and the application is now in two plants in the U.S.”

At the same time, Micropsi's system is intended to be as general-purpose as possible, he added. “It's extremely important for us to have no clue what the user is doing,” Vuine said. “We didn't develop MIRAI to solve a specific application. We want to be very generic, using the power of machine learning to solve problems.”

“For example, in Germany, there are machines that make cloth that goes around a mattress,” he said. “There are four women who know exactly how to slide the needles in—we couldn't tell them how they do it, and the hardware didn't exist. Cognex's system could pick up stuff and insert it somewhere, but they were more comfortable training ours.”

How long does it take to train MIRAI? “For simple problems, where you need to find something visually and there are no complex movements, like finding something on a table whose properties the robot doesn't know, it can take 40 demonstrations to get it in half an hour,” Vuine said. “If you vary the locations of objects on a table, then wait for 3.5 hours, the skill comes back from the cloud, and then the robot can get things done.”

“The fridge application was trickier,” he acknowledged. “There was high variance, and [we] sometimes had a case where one cared about position and trajectory around copper tubes. It took about 600 to 700 demonstrations, about a week or so, but it was a high-value application.”

“The zone of possibility has surprised people,” said Vuine. “Whether it's fridges, picking wine glasses, or following a contour to find the edge of a cable or table.”

Partnerships and support

Micropsi has worked with partners including NVIDIA, Universal Robots, and ABB. “NVIDIA was helpful when setting up our product, since GPUs [graphics processing units] are integral to any AI application,” said Vuine.

“We have close relationships with the robot guys,” he noted. “Our incentives are perfectly aligned: we allow them to enlarge the total addressable market. Variance for end effectors is usually a barrier to adoption. They also bring interesting use cases for MIRAI, such as plugging in cables or gear picking.”

“We also have a distribution network in Germany and the U.S.,” Vuine added. “In terms of robot platforms, there are some obvious manufacturers to work with next. Our customers like cobots, and we can support any machine with MIRAI's real-time interface.”

MIRAI works with any camera, including webcams, said Vuine. “We will expand the range of supported cameras,” he said. “If you buy our product today, you'll get our controller, some add-ons for mounting and a tablet, and some support.”
