Eureka! NVIDIA Corp. today said it has developed a new artificial intelligence agent that can teach robots complex skills. The Santa Clara, Calif.-based company said that Eureka has trained a robotic hand to perform rapid pen-spinning tricks as fast as a human can.

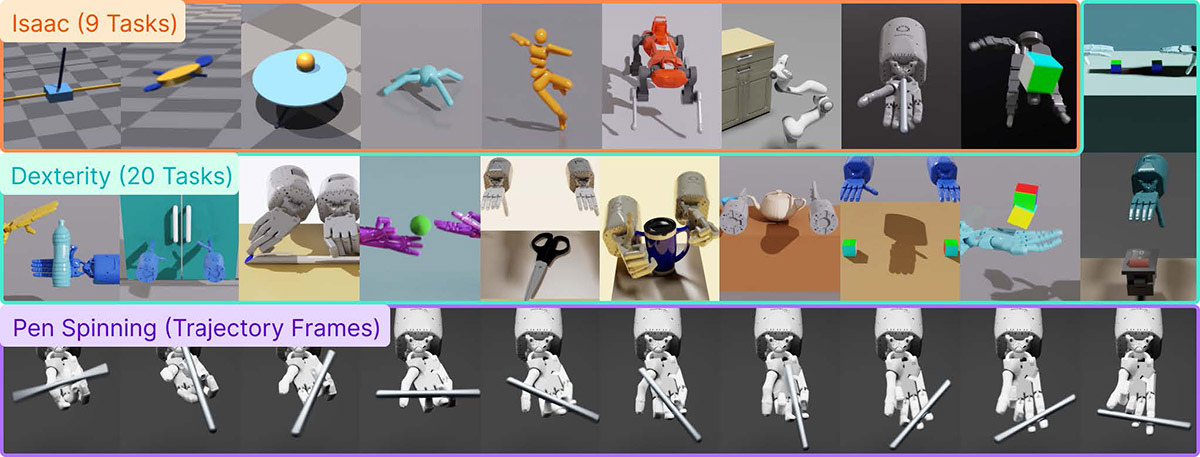

The prestidigitation, shown in the video above, is one of nearly 30 tasks that Eureka has taught robots by autonomously writing reward algorithms. Eureka has also taught robots to open drawers and cabinets, toss and catch balls, and manipulate scissors, among other tasks.

NVIDIA Research today published the Eureka library of AI algorithms, so people can experiment with them using NVIDIA Isaac Gym, a physics simulation reference application for reinforcement learning research.

Isaac Gym is built on NVIDIA Omniverse, a development platform for building 3D tools and applications based on the OpenUSD framework. Eureka itself is powered by the GPT-4 large language model.

“Reinforcement learning has enabled impressive wins over the last decade, yet many challenges still exist, such as reward design, which remains a trial-and-error process,” said Anima Anandkumar, senior director of AI research at NVIDIA and an author of the Eureka paper. “Eureka is a first step toward developing new algorithms that integrate generative and reinforcement learning methods to solve hard tasks.”

Eureka AI trains robots

Eureka-generated reward programs, which enable trial-and-error learning for robots, outperform expert human-written ones on more than 80% of tasks, according to the paper. That translates to an average performance improvement of more than 50% for the robots, NVIDIA said.

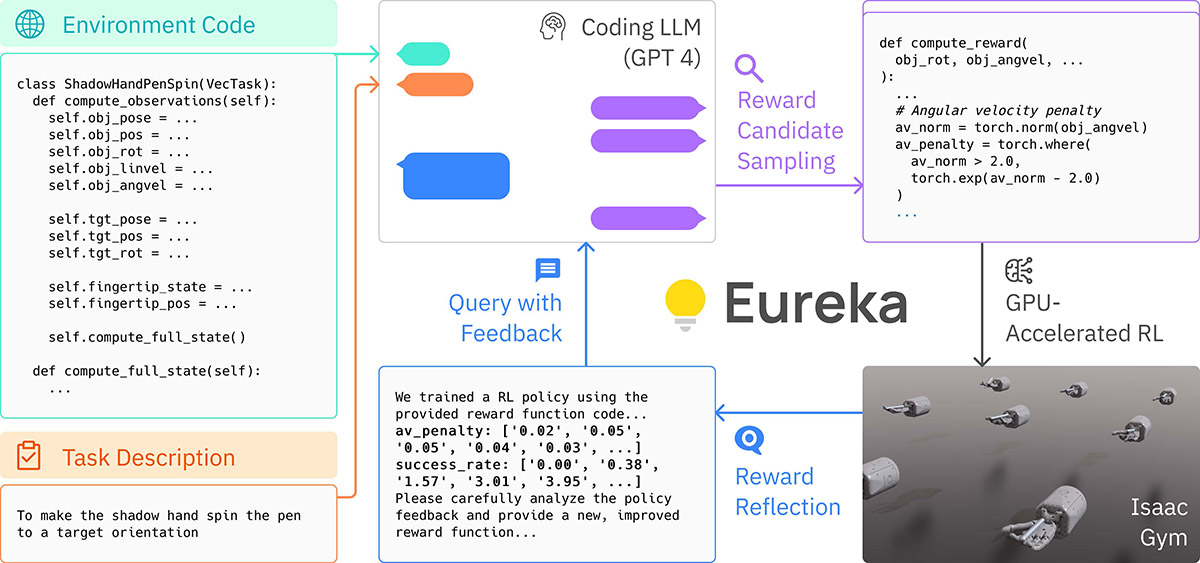

The AI agent taps the GPT-4 LLM and generative AI to write software code that rewards robots for reinforcement learning. It doesn’t require task-specific prompting or predefined reward templates — and it readily incorporates human feedback to modify its rewards for results more accurately aligned with a developer’s vision.
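To make "software code that rewards robots" concrete, here is a minimal sketch of what a reward function for a drawer-opening task might look like. It is an illustration only, not output from Eureka: the function name, its parameters, the 0.3/0.7 weights, and the shaping constants are all assumptions made for this example.

```python
import math

def drawer_open_reward(handle_pos, hand_pos, drawer_extension, target_extension=0.3):
    """Hypothetical reward for a drawer-opening task (all names are illustrative)."""
    # Reward the hand for getting close to the handle (exponential shaping).
    dist = math.dist(hand_pos, handle_pos)
    reach_reward = math.exp(-5.0 * dist)

    # Reward progress toward the target extension, clipped to [0, 1].
    open_reward = max(0.0, min(drawer_extension / target_extension, 1.0))

    # Weighted sum; the weights are arbitrary choices for this sketch.
    total = 0.3 * reach_reward + 0.7 * open_reward

    # Return the components too, so each term can be logged separately.
    return total, {"reach": reach_reward, "open": open_reward}
```

Returning the individual terms alongside the total is a design choice for this sketch: it lets training statistics be reported per component, which is the kind of information a developer or an LLM could use to revise the reward.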

Using GPU-accelerated simulation in Isaac Gym, Eureka can quickly evaluate the quality of large batches of reward candidates for more efficient training.

Eureka then summarizes the key statistics from the training results and instructs the LLM to improve its reward functions. In this way, the AI agent is self-improving. Eureka has taught a variety of robots, including quadruped, bipedal, quadrotor, dexterous-hand, and collaborative-arm designs, to accomplish a range of tasks.
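The generate, evaluate, and summarize cycle described above can be sketched as a simple loop. This is a toy under stated assumptions, not NVIDIA's implementation: `propose_rewards` stands in for the GPT-4 call and merely perturbs numeric weights, `train_and_score` stands in for reinforcement learning in simulation and returns a made-up score, and every name here is hypothetical.

```python
import random

def propose_rewards(best, feedback, n, rng):
    """Stand-in for the LLM call: returns n candidate reward weightings.
    A real system would send `feedback` to the model and receive code back;
    this stub ignores the text and perturbs the best weights found so far."""
    if best is None:
        return [{"reach": rng.random(), "open": rng.random()} for _ in range(n)]
    return [{k: max(0.0, v + rng.gauss(0, 0.1)) for k, v in best.items()}
            for _ in range(n)]

def train_and_score(weights):
    """Stand-in for RL training in simulation.
    Returns a toy task score that peaks at reach=0.3, open=0.7."""
    return 1.0 - abs(weights["reach"] - 0.3) - abs(weights["open"] - 0.7)

def summarize(candidates, scores):
    """Build a short text summary of the batch, as feedback for the next round."""
    best_i = max(range(len(scores)), key=scores.__getitem__)
    return f"best score {scores[best_i]:.3f} with weights {candidates[best_i]}"

def eureka_style_search(iterations=5, batch_size=8, seed=0):
    rng = random.Random(seed)
    best, best_score, feedback = None, float("-inf"), ""
    history = []
    for _ in range(iterations):
        # 1. Generate a batch of reward candidates.
        candidates = propose_rewards(best, feedback, batch_size, rng)
        # 2. Evaluate every candidate in the batch.
        scores = [train_and_score(c) for c in candidates]
        # 3. Summarize the results and carry them into the next round.
        feedback = summarize(candidates, scores)
        top = max(scores)
        if top > best_score:
            best_score, best = top, candidates[scores.index(top)]
        history.append(best_score)
    return best, best_score, history

if __name__ == "__main__":
    best, score, history = eureka_style_search()
    print(best, score, history)
```

Because the loop keeps the best candidate seen so far, the recorded best score never decreases from one iteration to the next, which is the sense in which the sketch is "self-improving."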

Researchers evaluated results

The research paper evaluates 20 Eureka-trained tasks, based on open-source dexterity benchmarks that require robotic hands to demonstrate a wide range of complex manipulation skills.

The results from nine Isaac Gym environments are showcased in visualizations generated using NVIDIA Omniverse.

“Eureka is a unique combination of large language models and NVIDIA GPU-accelerated simulation technologies,” said Jim Fan, senior research scientist at NVIDIA, who is one of the project’s contributors. “We believe that Eureka will enable dexterous robot control and provide a new way to produce physically realistic animations for artists.”

The LLM research followed recent NVIDIA Research advancements such as Voyager, an AI agent built with GPT-4 that can autonomously play Minecraft.

NVIDIA Research includes hundreds of scientists and engineers worldwide, with teams focused on topics such as AI, computer graphics, computer vision, self-driving cars, and robotics.

About the author

Angie Lee is corporate communications manager at NVIDIA. This blog post is republished with permission.
