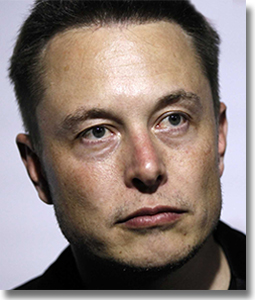

Tesla CEO Elon Musk has never been particularly shy about his fear of killer robots, but now he seems to be doing something about it.

On Friday, Musk and a group of partners announced the formation of OpenAI, a nonprofit venture devoted to open-source research into artificial intelligence.

Musk is co-chairing the project with Y Combinator CEO Sam Altman, and a number of powerful Silicon Valley players and groups are helping to fund it, including Peter Thiel, Jessica Livingston, and Amazon Web Services.

Altman described the project's open-source approach as a way of hedging humanity's bets against a single, centralized artificial intelligence.

“Just like humans protect against Dr. Evil by the fact that most humans are good, and the collective force of humanity can contain the bad elements, we think it's far more likely that many, many AIs will work to stop the occasional bad actors than the idea that there is a single AI a billion times more powerful than anything else,” Altman said in a joint interview with Backchannel.

“If that one thing goes off the rails or if Dr. Evil gets that one thing and there is nothing to counteract it, then we're really in a bad place.”

The institute’s own announcement takes a somewhat more measured tone. “Because of AI’s surprising history, it’s hard to predict when human-level AI might come within reach,” it reads. “When it does, it’ll be important to have a leading research institution which can prioritize a good outcome for all over its own self-interest.”

Much of the same logic applies to corporate centralization. Google, Facebook and Microsoft all have active research labs exploring machine learning and artificial intelligence techniques, and while all three regularly publish their findings openly, the people behind OpenAI worry that the flow of research will close off as those findings become more economically valuable.

OpenAI aims to prevent that slowdown by publishing its findings openly, much as academic AI researchers and private foundations like the Allen Institute already do.

Musk has been outspoken about the dangers of artificial intelligence in the past and has funded research into those risks.

It's unclear how much OpenAI will focus on safety issues, although Musk said they would be of particular interest to him. “I'm going to be super-conscious personally of safety,” Musk told Backchannel.

“This is something that I am quite concerned about. And if we do see something that we think is potentially a safety risk, we will want to make that public.”

Source: The Verge
