Although industrial automation has been used in food processing for decades, handling produce has been difficult for robots. Not only are fruits and vegetables variable in size and shape, but they must also be handled delicately to avoid bruising. Scientists have recently made progress in developing tactile sensors to enable robots to approach or surpass human-level motor skills and meet growing industry needs.

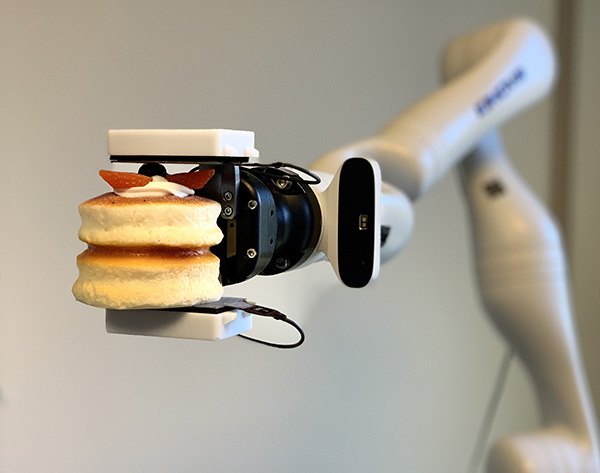

Researchers at Mitsubishi Electric Research Laboratories (MERL) have been working with the Department of Brain and Cognitive Sciences at the Massachusetts Institute of Technology on a prototype collaborative robot with tactile sensor technology. The ability to grasp, lift, and place food items without damaging them also has implications for manufacturing, healthcare and pharmaceuticals, and ultimately home robots, according to MERL.

Robotics 24/7 recently spoke with Daniel Nikovski, manager of the data analytics group; Allan Sullivan, group manager of the computer vision group; and Anthony Vetro, vice president at MERL. Each of them has years of experience in electronics, machine learning, and robotics. They explained their work on improving robotic perception for manipulation.

Augmenting visual perception

“Our origins were in motors and linkages, and it was a natural progression to the robotics space,” said Sullivan. “We were working on computer vision, and as we try to move to more diverse applications, we'd like to have robots manipulate objects in a more humanlike fashion. For example, robots need the capability to do complex things, like blind grasping in a high cabinet, which humans can do with their sense of touch. We can feel the pose, adjust our grasp, and adjust for slipping better than robots.”

Some cobots have cameras mounted near the end-of-arm tooling (EOAT) or even inside the grippers. Why not just rely on vision?

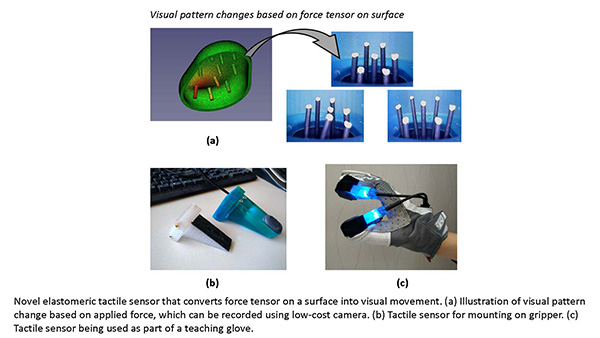

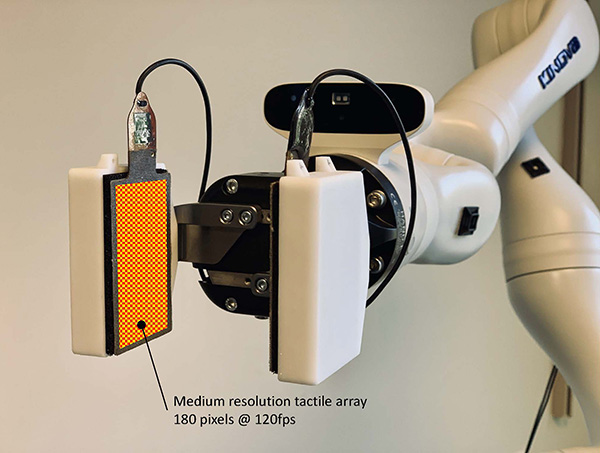

“Putting a camera inside the gripper takes a whole computer to calculate—simpler is always better,” Sullivan replied. “To train spatial sense to be similar to vision, we realized that we needed to augment visual perception with tactile. This involves both our computer vision and data analytics groups.”

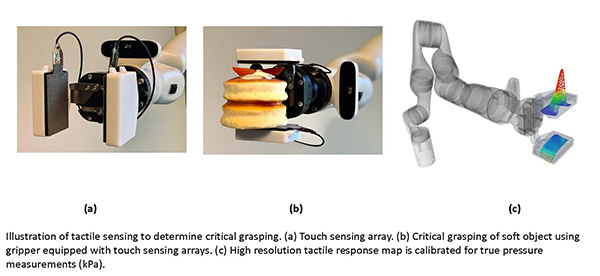

“We've been using a range of sensors,” said Vetro. “Allan's group was using pressure sensors, and Daniel's group was working on sensors with MIT. We've also seen cameras used to look at depth deformations inside soft grippers.”

“From a machine learning point of view, when we develop advanced robotics applications, we need to process a lot of sensory data,” added Nikovski. “Proprioceptive sensing at the wrist is used in all types of applications that require contact. This affects Mitsubishi's business in a big way. Without contact sensing, third-generation pick-and-place operations require hard-coded programming with a teach pendant.”

MERL applies machine learning

MERL's research includes the use of machine learning to reduce the cost of deployment as well as to improve robotic manipulation, said Nikovski.

“As soon as you need to react to sensory data in real time, it becomes complicated and expensive,” he said. “While the cost of robotics hardware has gone down dramatically, from $1 million to about the price of a car, programming is still ridiculously expensive. The market won't grow until we reduce that hurdle.”

“There has been a lot of excitement recently about reinforcement learning, with things like AlphaGo,” Nikovski said. “We had some success applying it to robotics and had a demonstration at CEATEC in Japan two years ago with different manipulators. However, most actual applications that our customers care about can't be solved through trial and error.”

“Another type of machine learning is learning from demonstration,” he explained. “This is where cobots come in. In the future, the fastest, most natural way to teach robots to do something will be kinesthetic, by physically guiding the robot through the task. The challenge is the ability to generalize. Replicating one example is easy, but what if the parts are in different positions?”

“We'd like to use AI to interpret rich sensor signals and enable the robot to learn from cameras, build physical models of contact manipulation, then use that to design force controllers at the wrist and fingers,” said Nikovski.

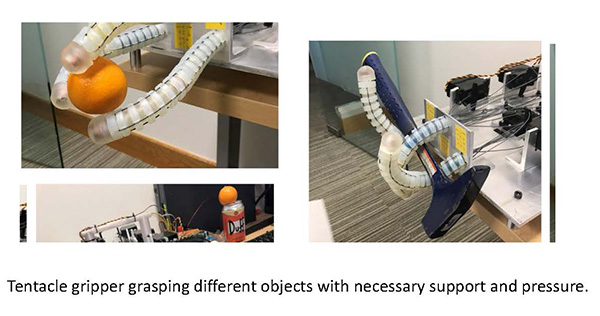

Soft manipulators and mental models

“Soft manipulators are good when you don't care about the final pose of the object, but sometimes you need to arrange things in a particular way,” said Sullivan. “For example, you may want to arrange food in an aesthetic way in a box. Other grippers solve the problem of picking, but you can do other things with tactile sensing.”

“Take, for example, in-grasp manipulation like picking up a screw, which we can rotate in the hand,” he said. “For circuit-board assembly, you'd use a very specialized robot, but humans can thread it through a hole partly based on touch. We look with our eyes and close the loop with touch. Breaking down manual processes for such tedious jobs is a gigantic niche for automation.”

Sullivan noted that MERL does not rely solely on grasping simulation. “The gap between simulation and physical systems, in terms of appearance and dynamics, means it doesn't really work on its own,” he said. “We tried to match and vary parameters so they span the normal range, and a computer can run trials 100 times faster, reset instantly, and work in a massively parallel way. It is more flexible if the controller learns in simulation; then we fine-tune it, and it works directly on a robot.”
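Sullivan's description of varying simulation parameters so they span the normal range of real-world conditions resembles the technique commonly called domain randomization. As a minimal sketch under our own assumptions (not MERL's code), the Python below draws a fresh set of physical parameters for each simulated training episode. The parameter names, nominal values, and the plus-or-minus 30% spread are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class SimParams:
    mass_kg: float       # hypothetical object mass
    friction: float      # hypothetical gripper/object friction coefficient
    sensor_noise: float  # hypothetical tactile-sensor noise level


# Hypothetical nominal values; real ranges would come from measurements
# of the physical robot and the objects it handles.
NOMINAL = SimParams(mass_kg=0.15, friction=0.6, sensor_noise=0.01)
SPREAD = 0.3  # vary each parameter by up to +/-30% of its nominal value


def sample_params(rng: random.Random) -> SimParams:
    """Draw one randomized set of simulation parameters."""
    def jitter(x: float) -> float:
        return x * rng.uniform(1.0 - SPREAD, 1.0 + SPREAD)

    return SimParams(
        mass_kg=jitter(NOMINAL.mass_kg),
        friction=jitter(NOMINAL.friction),
        sensor_noise=jitter(NOMINAL.sensor_noise),
    )


def randomized_episodes(n: int, seed: int = 0) -> list:
    """Parameters for n training episodes; each episode sees a different 'world'.

    A seeded generator keeps the episode set reproducible across runs.
    """
    rng = random.Random(seed)
    return [sample_params(rng) for _ in range(n)]


if __name__ == "__main__":
    for p in randomized_episodes(3):
        print(p)
```

A controller trained across many such randomized episodes is less likely to overfit to one particular simulator setting, which is why the approach pairs naturally with the fine-tuning on a physical robot that Sullivan describes.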

“People observe, make mental models, then execute in the real world,” said Sullivan. “This can also happen in robotics. How do we extract a model from perceptions and build it in the computer for the robot to execute?”

“There's a mathematical difference between tactile and visual modeling,” Nikovski said. “The first question that one of our Japanese collaborators asked was, 'How much data do you need?' As little as possible. That's another difference between computers and humans. Deep learning requires tons of data, and reinforcement learning requires lots of examples. The computer extracts very little from each data point. In most use cases, learning starts from scratch, but humans learn to parse tasks into elementary operations.”

“Robots do not understand this at all,” he continued. “A human worker sees a demonstration of parts being inserted into other parts and doesn't need to feel how hard they are. Thanks to object recognition, we have known affordances and can automatically adjust for steel versus plastic.”

“The only way for robots to move forward is to build libraries of skills and determine what the function is like,” said Nikovski. “Achieving one-shot learning is a holy grail. There are technologies that learn a single trajectory and adapt it to a new starting state and goal location using simple dynamic movement primitives.”
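Learning from a single demonstrated trajectory that can then be re-targeted to a new start and goal matches the idea behind dynamic movement primitives (DMPs), a technique from the robotics literature. The sketch below is our own illustration, not MERL's implementation. It assumes a one-dimensional trajectory, explicit Euler integration, and hypothetical gains (`alpha_z`, `alpha_x`) and basis count. A spring-damper pulls the state toward the goal, while a learned forcing term, fit by locally weighted regression to one demonstration, reproduces the demonstrated shape.

```python
import numpy as np


class DMP1D:
    """Minimal one-dimensional discrete dynamic movement primitive (DMP).

    Transformation system:  tau*z' = alpha_z*(beta_z*(g - y) - z) + f(x)
                            tau*y' = z
    Canonical system:       x(t) = exp(-alpha_x * t / tau)
    """

    def __init__(self, n_basis: int = 30, alpha_z: float = 25.0, alpha_x: float = 4.0):
        self.alpha_z = alpha_z
        self.beta_z = alpha_z / 4.0  # critically damped spring-damper
        self.alpha_x = alpha_x
        # Gaussian basis centres spaced evenly in time, expressed in phase x
        self.centers = np.exp(-alpha_x * np.linspace(0.0, 1.0, n_basis))
        # Widths derived from the spacing between neighbouring centres
        gaps = np.diff(self.centers)
        self.widths = 1.0 / np.append(gaps, gaps[-1]) ** 2
        self.weights = np.zeros(n_basis)
        self.tau = 1.0

    def _psi(self, x):
        """Basis activations; returns shape (len(x), n_basis)."""
        x = np.atleast_1d(x)[:, None]
        return np.exp(-self.widths * (x - self.centers) ** 2)

    def fit(self, y_demo: np.ndarray, dt: float) -> None:
        """Learn forcing-term weights from ONE demonstrated trajectory."""
        self.tau = (len(y_demo) - 1) * dt
        y0, goal = y_demo[0], y_demo[-1]
        yd = np.gradient(y_demo, dt)
        ydd = np.gradient(yd, dt)
        x = np.exp(-self.alpha_x * np.arange(len(y_demo)) * dt / self.tau)
        # Forcing term that would make the spring-damper follow the demo
        f_target = self.tau**2 * ydd - self.alpha_z * (
            self.beta_z * (goal - y_demo) - self.tau * yd)
        s = x * (goal - y0)
        psi = self._psi(x)
        # Locally weighted regression: one independent weight per basis function
        self.weights = (psi * (s * f_target)[:, None]).sum(axis=0) / (
            (psi * (s**2)[:, None]).sum(axis=0) + 1e-10)

    def rollout(self, y0: float, goal: float, dt: float, time_scale: float = 2.0) -> np.ndarray:
        """Generate a trajectory from a (possibly new) start y0 to a (possibly new) goal."""
        n_steps = int(round(time_scale * self.tau / dt)) + 1
        y, z = y0, 0.0
        path = np.empty(n_steps)
        for k in range(n_steps):
            path[k] = y
            x = np.exp(-self.alpha_x * k * dt / self.tau)
            psi = self._psi(x)[0]
            # Forcing term scaled by (goal - y0) so the learned shape re-targets
            f = psi @ self.weights / (psi.sum() + 1e-10) * x * (goal - y0)
            zdot = (self.alpha_z * (self.beta_z * (goal - y) - z) + f) / self.tau
            ydot = z / self.tau
            z += zdot * dt  # explicit Euler step
            y += ydot * dt
        return path


if __name__ == "__main__":
    dt = 0.001
    s = np.linspace(0.0, 1.0, 1001)
    # Synthetic demo: minimum-jerk reach from 0 to 1 with an extra bump
    demo = 10 * s**3 - 15 * s**4 + 6 * s**5 + 0.3 * np.sin(np.pi * s) ** 2
    dmp = DMP1D()
    dmp.fit(demo, dt)
    moved = dmp.rollout(y0=0.5, goal=2.0, dt=dt)
    print(f"final position {moved[-1]:.3f} (new goal 2.0)")
```

Because the forcing term is scaled by `(goal - y0)` and fades out as the phase `x` decays, the same learned weights reproduce the demonstrated shape while still converging to whatever start and goal are supplied. That is the "one example, parts in different positions" generalization discussed earlier in the interview, in its simplest one-dimensional form.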

MERL trains robots with in-house data

Part of the challenge of making cobots more dexterous is the amount of data to process from sensors or needed for training. MERL is using its own machine learning data rather than publicly available libraries, said Nikovski.

“For force-torque data, it depends on the specific configuration,” he said. “In the field of computer vision—this is state of the art—we use existing networks and a form of transfer learning.”

“We work a lot outside of robotics, and we use existing data sets in those spaces,” said Sullivan. “We start with a pretrained network and fine-tune the back end. A lot of effort is going into minimizing the amount of data being used. It's a major thread of research throughout the computer vision community.”

“In bin picking, we recently trained a neural network to determine shape and poses so it could pick one object at a time,” he said. “What is the thing—say it's a bunch of screws—where does each one begin and end? Our system surpassed the geometric model of the object and can look at a bin and pick one at a time.”

As with the development of autonomous vehicles that can safely navigate complex environments, robotic grasping is about learning to generalize, but it has different requirements.

“A robot is currently an expensive sorter, but it could be more flexible,” Sullivan acknowledged. “We're looking at objects that can fit in your hand. Unlike some of our competitors, we don't have robots that pick up cars.”

Accuracy and variability

MERL is focusing on improving robotic dexterity in manufacturing, said Nikovski. “Some of our biggest customers would benefit from 20-micron accuracy,” he said. “That capability is what they buy our robots for. For certain applications, like cellphone assembly, it makes more sense to create jigs and fixtures for huge production runs.”

“We'd like to extend this to much smaller companies, with higher-mix, lower-volume production,” he added. “One of our main types of customers is in assembly. In broad terms, any arrangement of parts in a configuration—such as in kitting, the food industry, or the medical industry—without human input is desirable for robotics.”

“The use of robots for materials handling is mostly solved, unless grasping is difficult, like bin picking,” Nikovski said. “A lot of customers are also interested in order fulfillment.”

MERL is working to improve how robots handle variability in shapes and sizes of food such as chicken nuggets or doughnuts, said Vetro.

“Being able to generalize is very important,” added Nikovski. “Restaurants are a prime example of high mix and low volume; just look at the menu. Such customization is not achievable with current robotics technology. You cannot really use approaches based on CAD models for food. Humans don't need geometric models, and machine learning is likely to help robots learn or estimate them.”

“We're starting with a subset of the problem that's more manageable and makes more business sense,” said Vetro. “We'll refine how robots deal with soft items of variable size and shape, and then graduate to more challenging cases. We're actively working on that now and expect results to be delivered in the near term.”

Speaking of time frames, how long before machine learning for manipulation is sufficiently generalized for household robots?

“We don't expect robots to be able to, say, change a baby's diaper for another 10 years or more, but we're laying the foundation,” Vetro said.
